Deploying the PHP Guestbook Application with MongoDB on MicroK8s in LXD

Previous post

What this post covers

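Using the MicroK8s-in-LXD cluster, I do a mash-up of two things: deploying the PHP guestbook application with MongoDB, and giving its Service an external IP.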

Preparation

Alias setup

Typing lxc exec ... every time was too verbose last time, so I set up aliases.

$ alias|grep lxc

alias kubectl='lxc exec mk8s1 -- kubectl'

alias microk8s='lxc exec mk8s1 -- microk8s'

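k8s cluster

As in the previous post, I built a four-node k8s environment, mk8s1 through mk8s4, and verified everything on it.

A single node is probably enough for what this post does, so adjust as you see fit.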

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

mk8s2 Ready <none> 5h27m v1.20.5-34+40f5951bd9888a

mk8s4 Ready <none> 5h24m v1.20.5-34+40f5951bd9888a

mk8s1 Ready <none> 4d3h v1.20.5-34+40f5951bd9888a

mk8s3 Ready <none> 5h25m v1.20.5-34+40f5951bd9888a

$ kubectl get pods

No resources found in default namespace.

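Enabling MetalLB

This time I use the MetalLB add-on bundled with MicroK8s as a stand-in for an external load balancer, so I change the range of addresses that LXD hands out over DHCP to keep it from colliding with the IPs MetalLB assigns.

First, set the address range LXD uses: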

$ lxc network set lxdbr0 ipv4.dhcp.ranges 10.116.214.100-10.116.214.254

$ lxc network show lxdbr0

config:

ipv4.address: 10.116.214.1/24

ipv4.dhcp.ranges: 10.116.214.100-10.116.214.254

ipv4.nat: "true"

ipv6.address: fd42:d878:2ae:7d9e::1/64

ipv6.nat: "true"

description: ""

name: lxdbr0

type: bridge

used_by:

- /1.0/instances/mk8s1

- /1.0/instances/mk8s2

- /1.0/instances/mk8s3

- /1.0/profiles/default

- /1.0/profiles/myDefault

managed: true

status: Created

locations:

- none

LXD now hands out addresses in the range 10.116.214.100-10.116.214.254.

Next, configure MetalLB:

$ microk8s enable metallb

Enabling MetalLB

Enter each IP address range delimited by comma (e.g. '10.64.140.43-10.64.140.49,192.168.0.105-192.168.0.111'): 10.116.214.2-10.116.214.99

Applying Metallb manifest

namespace/metallb-system created

secret/memberlist created

podsecuritypolicy.policy/controller created

podsecuritypolicy.policy/speaker created

serviceaccount/controller created

serviceaccount/speaker created

clusterrole.rbac.authorization.k8s.io/metallb-system:controller created

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created

role.rbac.authorization.k8s.io/config-watcher created

role.rbac.authorization.k8s.io/pod-lister created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created

rolebinding.rbac.authorization.k8s.io/config-watcher created

rolebinding.rbac.authorization.k8s.io/pod-lister created

daemonset.apps/speaker created

deployment.apps/controller created

configmap/config created

MetalLB is enabled

MetalLB now assigns addresses from 10.116.214.2-10.116.214.99.
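Since the whole point of the two settings is that the LXD DHCP range and the MetalLB pool never collide, it can be worth checking the ranges before typing them in. The following is only a minimal sketch in POSIX shell; the helper names ip_to_int and ranges_overlap are hypothetical and are not part of LXD, MicroK8s, or MetalLB.

```shell
#!/bin/sh
# Hypothetical helper: convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( $1 * 16777216 + $2 * 65536 + $3 * 256 + $4 ))
}

# Hypothetical helper: succeed (exit 0) if the inclusive ranges
# [$1, $2] and [$3, $4] share at least one address.
ranges_overlap() {
  a_start=$(ip_to_int "$1"); a_end=$(ip_to_int "$2")
  b_start=$(ip_to_int "$3"); b_end=$(ip_to_int "$4")
  [ "$a_start" -le "$b_end" ] && [ "$b_start" -le "$a_end" ]
}

# LXD DHCP range vs. MetalLB pool used in this post.
if ranges_overlap 10.116.214.100 10.116.214.254 10.116.214.2 10.116.214.99; then
  echo "overlap"
else
  echo "no overlap"   # prints: no overlap
fi
```

The check is plain integer comparison, so it assumes both ranges sit in the same flat address space and that the octets have no leading zeros.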

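Enabling CoreDNS

This is also a MicroK8s add-on, so it takes a single command.

The frontend resolves the backend by name when it connects, and without this add-on the lookup failed with an error.

I don't know k8s very well, so there may be a simpler way; if you know one, please tell me.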

$ microk8s enable dns

Enabling DNS

Applying manifest

serviceaccount/coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

clusterrole.rbac.authorization.k8s.io/coredns created

clusterrolebinding.rbac.authorization.k8s.io/coredns created

Restarting kubelet

Adding argument --cluster-domain to nodes.

Configuring node 10.116.214.109

Configuring node 10.116.214.195

Configuring node 10.116.214.113

Configuring node 10.116.214.130

Adding argument --cluster-dns to nodes.

Configuring node 10.116.214.109

Configuring node 10.116.214.195

Configuring node 10.116.214.113

Configuring node 10.116.214.130

Restarting nodes.

Configuring node 10.116.214.109

Configuring node 10.116.214.195

Configuring node 10.116.214.113

Configuring node 10.116.214.130

DNS is enabled
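One way to confirm that in-cluster name resolution works after enabling the add-on is to run nslookup from a throwaway pod. This is a sketch, not something from the original run: the function name check_cluster_dns, the pod name dns-check, and the busybox:1.28 image are my assumptions, and the default lookup target kubernetes.default is just the API server's Service name.

```shell
# Hypothetical helper: resolve a Service name from inside the cluster.
# $1 is the name to look up (defaults to kubernetes.default).
# The temporary pod is removed when the command finishes (--rm).
check_cluster_dns() {
  kubectl run dns-check --image=busybox:1.28 --restart=Never --rm -i \
    -- nslookup "${1:-kubernetes.default}"
}
```

Once the mongo Service from the later steps exists, calling check_cluster_dns mongo should show whether the name the frontend depends on resolves.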

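Deploying the PHP guestbook application with MongoDB

From here on, this mostly follows the Kubernetes guestbook tutorial (the manifests are the ones published under k8s.io/examples).

Starting MongoDB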

$ kubectl apply -f https://k8s.io/examples/application/guestbook/mongo-deployment.yaml

deployment.apps/mongo created

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

mongo-75f59d57f4-nwqg6 1/1 Running 0 109s

$ kubectl logs deployment/mongo

2021-04-20T08:19:40.413+0000 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'

2021-04-20T08:19:40.420+0000 W ASIO [main] No TransportLayer configured during NetworkInterface startup

2021-04-20T08:19:40.421+0000 I CONTROL [initandlisten] MongoDB starting : pid=1 port=27017 dbpath=/data/db 64-bit host=mongo-75f59d57f4-nwqg6

2021-04-20T08:19:40.421+0000 I CONTROL [initandlisten] db version v4.2.13

2021-04-20T08:19:40.421+0000 I CONTROL [initandlisten] git version: 82dd40f60c55dae12426c08fd7150d79a0e28e23

2021-04-20T08:19:40.421+0000 I CONTROL [initandlisten] OpenSSL version: OpenSSL 1.1.1 11 Sep 2018

2021-04-20T08:19:40.421+0000 I CONTROL [initandlisten] allocator: tcmalloc

2021-04-20T08:19:40.421+0000 I CONTROL [initandlisten] modules: none

2021-04-20T08:19:40.421+0000 I CONTROL [initandlisten] build environment:

2021-04-20T08:19:40.421+0000 I CONTROL [initandlisten] distmod: ubuntu1804

2021-04-20T08:19:40.421+0000 I CONTROL [initandlisten] distarch: x86_64

2021-04-20T08:19:40.421+0000 I CONTROL [initandlisten] target_arch: x86_64

2021-04-20T08:19:40.421+0000 I CONTROL [initandlisten] options: { net: { bindIp: "0.0.0.0" } }

2021-04-20T08:19:40.426+0000 I STORAGE [initandlisten] wiredtiger_open config: create,cache_size=7480M,cache_overflow=(file_max=0M),session_max=33000,eviction=(threads_min=4,threads_max=4),config_base=false,statistics=(fast),log=(enabled=true,archive=true,path=journal,compressor=snappy),file_manager=(close_idle_time=100000,close_scan_interval=10,close_handle_minimum=250),statistics_log=(wait=0),verbose=[recovery_progress,checkpoint_progress],

2021-04-20T08:19:41.618+0000 I STORAGE [initandlisten] WiredTiger message [1618906781:618384][1:0x7ff306b03b00], txn-recover: Set global recovery timestamp: (0, 0)

2021-04-20T08:19:41.626+0000 I RECOVERY [initandlisten] WiredTiger recoveryTimestamp. Ts: Timestamp(0, 0)

2021-04-20T08:19:41.640+0000 I STORAGE [initandlisten] No table logging settings modifications are required for existing WiredTiger tables. Logging enabled? 1

2021-04-20T08:19:41.641+0000 I STORAGE [initandlisten] Timestamp monitor starting

2021-04-20T08:19:41.643+0000 I CONTROL [initandlisten]

2021-04-20T08:19:41.643+0000 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2021-04-20T08:19:41.643+0000 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2021-04-20T08:19:41.643+0000 I CONTROL [initandlisten]

2021-04-20T08:19:41.646+0000 I STORAGE [initandlisten] createCollection: admin.system.version with provided UUID: eb79888d-b41f-4173-9934-67a966bb0a18 and options: { uuid: UUID("eb79888d-b41f-4173-9934-67a966bb0a18") }

2021-04-20T08:19:41.654+0000 I INDEX [initandlisten] index build: done building index _id_ on ns admin.system.version

2021-04-20T08:19:41.655+0000 I SHARDING [initandlisten] Marking collection admin.system.version as collection version: <unsharded>

2021-04-20T08:19:41.655+0000 I COMMAND [initandlisten] setting featureCompatibilityVersion to 4.2

2021-04-20T08:19:41.656+0000 I SHARDING [initandlisten] Marking collection local.system.replset as collection version: <unsharded>

2021-04-20T08:19:41.659+0000 I STORAGE [initandlisten] Flow Control is enabled on this deployment.

2021-04-20T08:19:41.660+0000 I SHARDING [initandlisten] Marking collection admin.system.roles as collection version: <unsharded>

2021-04-20T08:19:41.661+0000 I STORAGE [initandlisten] createCollection: local.startup_log with generated UUID: 1ff56143-4e14-4bc3-bd46-91f32b7047b8 and options: { capped: true, size: 10485760 }

2021-04-20T08:19:41.675+0000 I INDEX [initandlisten] index build: done building index _id_ on ns local.startup_log

2021-04-20T08:19:41.676+0000 I SHARDING [initandlisten] Marking collection local.startup_log as collection version: <unsharded>

2021-04-20T08:19:41.676+0000 I FTDC [initandlisten] Initializing full-time diagnostic data capture with directory '/data/db/diagnostic.data'

2021-04-20T08:19:41.683+0000 I SHARDING [LogicalSessionCacheRefresh] Marking collection config.system.sessions as collection version: <unsharded>

2021-04-20T08:19:41.685+0000 I NETWORK [listener] Listening on /tmp/mongodb-27017.sock

2021-04-20T08:19:41.685+0000 I NETWORK [listener] Listening on 0.0.0.0

2021-04-20T08:19:41.685+0000 I NETWORK [listener] waiting for connections on port 27017

2021-04-20T08:19:41.691+0000 I CONTROL [LogicalSessionCacheReap] Sessions collection is not set up; waiting until next sessions reap interval: config.system.sessions does not exist

2021-04-20T08:19:41.691+0000 I STORAGE [LogicalSessionCacheRefresh] createCollection: config.system.sessions with provided UUID: d79907f7-f9f3-4d64-a403-6b289f1e315e and options: { uuid: UUID("d79907f7-f9f3-4d64-a403-6b289f1e315e") }

2021-04-20T08:19:41.701+0000 I INDEX [LogicalSessionCacheRefresh] index build: done building index _id_ on ns config.system.sessions

2021-04-20T08:19:41.711+0000 I INDEX [LogicalSessionCacheRefresh] index build: starting on config.system.sessions properties: { v: 2, key: { lastUse: 1 }, name: "lsidTTLIndex", ns: "config.system.sessions", expireAfterSeconds: 1800 } using method: Hybrid

2021-04-20T08:19:41.711+0000 I INDEX [LogicalSessionCacheRefresh] build may temporarily use up to 200 megabytes of RAM

2021-04-20T08:19:41.712+0000 I INDEX [LogicalSessionCacheRefresh] index build: collection scan done. scanned 0 total records in 0 seconds

2021-04-20T08:19:41.712+0000 I INDEX [LogicalSessionCacheRefresh] index build: inserted 0 keys from external sorter into index in 0 seconds

2021-04-20T08:19:41.714+0000 I INDEX [LogicalSessionCacheRefresh] index build: done building index lsidTTLIndex on ns config.system.sessions

2021-04-20T08:19:42.003+0000 I SHARDING [ftdc] Marking collection local.oplog.rs as collection version: <unsharded>

Registering the MongoDB Service

$ kubectl apply -f https://k8s.io/examples/application/guestbook/mongo-service.yaml

service/mongo created

$ kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 4d3h

mongo ClusterIP 10.152.183.12 <none> 27017/TCP 5s

Starting the frontend

$ kubectl apply -f https://k8s.io/examples/application/guestbook/frontend-deployment.yaml

deployment.apps/frontend created

$ kubectl get pods -l app.kubernetes.io/name=guestbook -l app.kubernetes.io/component=frontend

NAME READY STATUS RESTARTS AGE

frontend-848d88c7c-6kgv5 1/1 Running 0 6m55s

frontend-848d88c7c-5xv65 1/1 Running 0 6m55s

frontend-848d88c7c-rhzgb 1/1 Running 0 6m55s

Registering the frontend Service

$ kubectl apply -f https://k8s.io/examples/application/guestbook/frontend-service.yaml

service/frontend created

$ kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 4d4h

mongo ClusterIP 10.152.183.12 <none> 27017/TCP 12m

frontend ClusterIP 10.152.183.52 <none> 80/TCP 15s

Binding port 8080 on mk8s1 to port 80 of the frontend with port forwarding

$ lxc list mk8s1

+-------+---------+-----------------------------+----------------------------------------------+-----------+-----------+

| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS |

+-------+---------+-----------------------------+----------------------------------------------+-----------+-----------+

| mk8s1 | RUNNING | 10.116.214.195 (eth0) | fd42:d878:2ae:7d9e:216:3eff:fe81:8105 (eth0) | CONTAINER | 1 |

| | | 10.1.238.128 (vxlan.calico) | | | |

+-------+---------+-----------------------------+----------------------------------------------+-----------+-----------+

$ kubectl port-forward svc/frontend --address 0.0.0.0 8080:80

Forwarding from 0.0.0.0:8080 -> 80

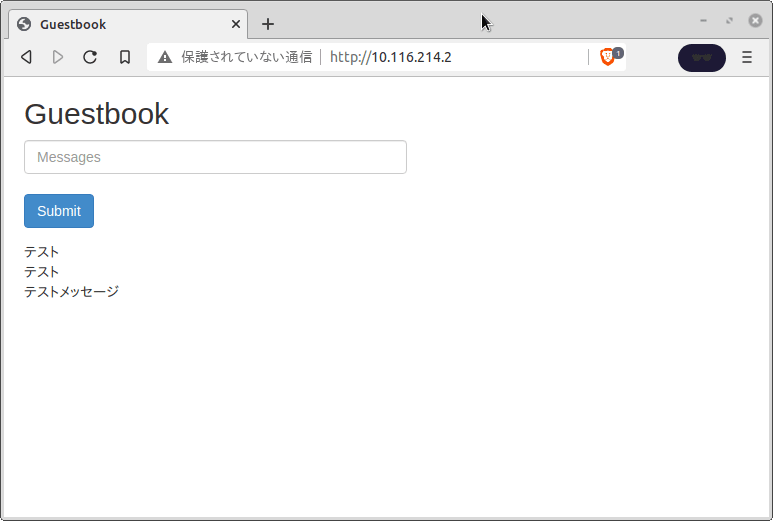

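In this state, opening port 8080 on mk8s1's eth0 address (10.116.214.195 above) shows the frontend, and it works.

Editing the Service config to get an external IP from MetalLB

This part is based on a different tutorial.

As things stand, the page can only be reached through the mk8s1 node, so I change the registered Service to give it an external IP.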

$ kubectl edit service frontend

This opens the Service in an editor. Change

type: ClusterIP

to

type: LoadBalancer

and save. Then check the Service:

$ kubectl get service frontend

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

frontend LoadBalancer 10.152.183.52 10.116.214.2 80:31658/TCP 16m

The external IP 10.116.214.2 is now assigned, so opening that address is enough to bring up the same page as before.
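The same type change can probably be made without opening an editor by using kubectl patch, which leaves less room for a slip of the keyboard. This is a sketch I did not run as part of the steps above; the function name set_service_loadbalancer is hypothetical.

```shell
# Hypothetical helper: switch a Service to type LoadBalancer non-interactively.
# $1 is the Service name, e.g. frontend.
set_service_loadbalancer() {
  kubectl patch service "$1" -p '{"spec":{"type":"LoadBalancer"}}'
}
```

Calling set_service_loadbalancer frontend and then kubectl get service frontend should show an EXTERNAL-IP from the MetalLB pool, the same as after the manual edit.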

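Re-registering the Service to assign an external IP may also be fine

A botched edit looks painful to recover from, so deleting the Service and registering it again may be the safer route.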

$ kubectl delete service frontend

service "frontend" deleted

$ kubectl expose deployment frontend --type=LoadBalancer --name=frontend

service/frontend exposed

$ kubectl get service frontend

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

frontend LoadBalancer 10.152.183.74 10.116.214.2 80:32211/TCP 1s

The page comes up the same way with this approach too.
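Whichever route is used, the assigned address can be read back from the Service status instead of being copied out of the table by eye. A minimal sketch, assuming MetalLB has already populated the status; the function name service_external_ip is hypothetical.

```shell
# Hypothetical helper: print the external IP assigned to a LoadBalancer Service.
# $1 is the Service name. Prints nothing if no address has been assigned yet.
service_external_ip() {
  kubectl get service "$1" -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}
```

For example, curl -I "http://$(service_external_ip frontend)/" run from the LXD host would request the guestbook page through the external IP.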

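Closing

If I could use AWS or GKE without worrying about cost I would probably hit fewer snags, but doing it myself I got stuck quite a lot...

And please, keep the Japanese tutorial up to date... that was the main culprit...

For now I think I'll keep going like this and see how far I get.

See you next time.

Shami shakkiri~

P.S.

Teaching myself on an on-prem setup with no experts around, I can't tell whether what I'm doing is actually right, so I may well be doing something very odd, which is scary...

If anyone knowledgeable is reading this, I'd love some advice.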
