MicroK8s Self-Study 3: Deploying the PHP Guestbook Application with Redis

This one just won't go smoothly...

Rebuilding every time is a pain, so I'll snapshot the 4-node cluster as it is now.

$ seq 4 | xargs -I{} -P4 -n1 lxc launch mk8s mk8s{};seq 4 | xargs -I{} -P4 -n1 lxc exec mk8s{} -- microk8s status | grep nodes;for i in $(seq 2 4) ; do lxc exec mk8s1 -- microk8s add-node | tail -n1 | xargs lxc exec mk8s$i -- ; done

Creating mk8s4

Creating mk8s3

Creating mk8s1

Creating mk8s2

Starting mk8s1

Starting mk8s3

Starting mk8s4

Starting mk8s2

Contacting cluster at 10.116.214.195

Waiting for this node to finish joining the cluster. ..

Contacting cluster at 10.116.214.195

Waiting for this node to finish joining the cluster. ..

Contacting cluster at 10.116.214.195

Waiting for this node to finish joining the cluster. ..

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

mk8s3 Ready <none> 13m v1.20.5-34+40f5951bd9888a

mk8s4 Ready <none> 12m v1.20.5-34+40f5951bd9888a

mk8s1 Ready <none> 3d22h v1.20.5-34+40f5951bd9888a

mk8s2 Ready <none> 15m v1.20.5-34+40f5951bd9888a

$ tmux save-buffer - | xsel --clipboard

$ lxc snapshot mk8s1

$ lxc snapshot mk8s2

$ lxc snapshot mk8s3

$ lxc snapshot mk8s4
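
The four snapshot commands above can also be written as a loop. This is only a minimal sketch, assuming the containers are named mk8s1 to mk8s4 as in this log; the function name `snapshot_cluster` and the `LXC` override variable are made up here for illustration. Passing the snapshot name explicitly avoids depending on the default name (snap0 for the first snapshot), which is the name used later when restoring.

```shell
# Snapshot every node of the cluster under one explicit snapshot name.
# LXC can be overridden (e.g. LXC=echo) for a dry run; it defaults to lxc.
snapshot_cluster() {
  count="$1"   # number of nodes, e.g. 4
  name="$2"    # snapshot name, e.g. snap0
  for i in $(seq 1 "$count"); do
    ${LXC:-lxc} snapshot "mk8s$i" "$name" || return 1
  done
}

# Usage: snapshot_cluster 4 snap0
```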

$ lxc list

+-------+---------+-----------------------------+----------------------------------------------+-----------+-----------+

| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS |

+-------+---------+-----------------------------+----------------------------------------------+-----------+-----------+

| mk8s1 | RUNNING | 10.116.214.195 (eth0) | fd42:d878:2ae:7d9e:216:3eff:fe81:8105 (eth0) | CONTAINER | 1 |

| | | 10.1.238.128 (vxlan.calico) | | | |

+-------+---------+-----------------------------+----------------------------------------------+-----------+-----------+

| mk8s2 | RUNNING | 10.116.214.109 (eth0) | fd42:d878:2ae:7d9e:216:3eff:fee1:134f (eth0) | CONTAINER | 1 |

| | | 10.1.115.128 (vxlan.calico) | | | |

+-------+---------+-----------------------------+----------------------------------------------+-----------+-----------+

| mk8s3 | RUNNING | 10.116.214.130 (eth0) | fd42:d878:2ae:7d9e:216:3eff:feac:3825 (eth0) | CONTAINER | 1 |

| | | 10.1.217.192 (vxlan.calico) | | | |

+-------+---------+-----------------------------+----------------------------------------------+-----------+-----------+

| mk8s4 | RUNNING | 10.116.214.113 (eth0) | fd42:d878:2ae:7d9e:216:3eff:fe59:af6d (eth0) | CONTAINER | 1 |

| | | 10.1.236.128 (vxlan.calico) | | | |

+-------+---------+-----------------------------+----------------------------------------------+-----------+-----------+

Creating the Redis master

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/website/master/content/ja/examples/application/guestbook/redis-master-deployment.yaml

deployment.apps/redis-master created

$ kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

redis-master 1/1 1 1 87s

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/website/master/content/ja/examples/application/guestbook/redis-master-service.yaml

service/redis-master created

$ kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 3d22h

redis-master ClusterIP 10.152.183.42 <none> 6379/TCP 10s

Creating the Redis slaves

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/website/master/content/ja/examples/application/guestbook/redis-slave-deployment.yaml

deployment.apps/redis-slave created

$ kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

redis-master 1/1 1 1 6m

redis-slave 2/2 2 2 2m13s

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/website/master/content/ja/examples/application/guestbook/redis-slave-service.yaml

service/redis-slave created

$ kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 3d22h

redis-master ClusterIP 10.152.183.42 <none> 6379/TCP 5m16s

redis-slave ClusterIP 10.152.183.246 <none> 6379/TCP 12s

Creating the frontend

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/website/master/content/ja/examples/application/guestbook/frontend-deployment.yaml

deployment.apps/frontend created

$ kubectl get pods -l app=guestbook

NAME READY STATUS RESTARTS AGE

frontend-6c6d6dfd4d-q4gqh 1/1 Running 0 73m

frontend-6c6d6dfd4d-g8vlj 1/1 Running 0 73m

frontend-6c6d6dfd4d-7qgkw 1/1 Running 0 73m

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/website/master/content/ja/examples/application/guestbook/frontend-service.yaml

service/frontend created

$ kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 4d

redis-master ClusterIP 10.152.183.42 <none> 6379/TCP 76m

redis-slave ClusterIP 10.152.183.246 <none> 6379/TCP 71m

frontend NodePort 10.152.183.60 <none> 80:30020/TCP 7s

I redid everything, but the frontend page still failed with an error, so I diffed the YAML files, and sure enough there are differences...

$ diff <(wget -O - -q https://k8s.io/examples/application/guestbook/frontend-deployment.yaml) <(wget -O - -q https://raw.githubusercontent.com/kubernetes/website/master/content/ja/examples/application/guestbook/frontend-deployment.yaml)

1c1

< apiVersion: apps/v1

---

> apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2

6,7c6

< app.kubernetes.io/name: guestbook

< app.kubernetes.io/component: frontend

---

> app: guestbook

11,12c10,11

< app.kubernetes.io/name: guestbook

< app.kubernetes.io/component: frontend

---

> app: guestbook

> tier: frontend

17,18c16,17

< app.kubernetes.io/name: guestbook

< app.kubernetes.io/component: frontend

---

> app: guestbook

> tier: frontend

21,23c20,21

< - name: guestbook

< image: paulczar/gb-frontend:v5

< # image: gcr.io/google-samples/gb-frontend:v4

---

> - name: php-redis

> image: gcr.io/google-samples/gb-frontend:v4

30a29,36

> # Using `GET_HOSTS_FROM=dns` requires your cluster to

> # provide a dns service. As of Kubernetes 1.3, DNS is a built-in

> # service launched automatically. However, if the cluster you are using

> # does not have a built-in DNS service, you can instead

> # access an environment variable to find the master

> # service's host. To do so, comment out the 'value: dns' line above, and

> # uncomment the line below:

> # value: env
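
The most important difference in that diff is the container image. A quick way to see which image a manifest would deploy before applying it is to filter its `image:` lines. This is a rough sketch, not a YAML parser; the helper name `list_images` is hypothetical.

```shell
# Print the container images named in a manifest read from stdin.
# Lines where "image:" is commented out are skipped, because the pattern
# only allows whitespace and an optional "- " before "image:".
list_images() {
  sed -n 's/^[[:space:]]*\(-[[:space:]]*\)\{0,1\}image:[[:space:]]*//p'
}

# Usage:
#   wget -O - -q https://k8s.io/examples/application/guestbook/frontend-deployment.yaml | list_images
```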

OK, time to restore from the snapshots!

$ lxc stop mk8s{1,2,3,4}

$ seq 4 | xargs -n1 -P4 -I{} lxc restore mk8s{} snap0

$ lxc start mk8s{1,2,3,4}

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

mk8s2 Ready <none> 124m v1.20.5-34+40f5951bd9888a

mk8s1 Ready <none> 4d v1.20.5-34+40f5951bd9888a

mk8s3 Ready <none> 122m v1.20.5-34+40f5951bd9888a

mk8s4 Ready <none> 121m v1.20.5-34+40f5951bd9888a

$ kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-node-bm2kb 1/1 Running 1 124m

kube-system calico-node-m2k78 1/1 Running 1 123m

kube-system calico-node-rmbsc 1/1 Running 1 121m

kube-system calico-node-rs879 1/1 Running 1 126m

kube-system calico-kube-controllers-847c8c99d-5tcwh 1/1 Running 2 4d

OK, that's handy.
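
After a restore, the nodes take a moment to come back, so instead of re-running `kubectl get nodes` by hand it is possible to block until every node reports Ready. A small sketch; `wait_for_cluster` and the `KUBECTL` override are names invented for this example, and the command may need a retry while the API server itself is still starting.

```shell
# Block until all nodes report the Ready condition, or the timeout expires.
# KUBECTL can be overridden (e.g. KUBECTL=echo) for a dry run.
wait_for_cluster() {
  timeout="${1:-300s}"
  ${KUBECTL:-kubectl} wait --for=condition=Ready nodes --all --timeout="$timeout"
}

# Usage: wait_for_cluster 300s
```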

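Heyyyy!

I thought it was odd that the Redis YAML was missing, and it turns out the Redis tutorial has been removed from the English version and replaced with a MongoDB one!

The frontend YAML is being reused, so that has to be what's breaking things 😠

Anyway, let's calm down and try the MongoDB version.

We can talk after that.

First, bring up MongoDB.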

$ kubectl apply -f https://k8s.io/examples/application/guestbook/mongo-deployment.yaml

deployment.apps/mongo created

$ kubectl get deployment/mongo

NAME READY UP-TO-DATE AVAILABLE AGE

mongo 1/1 1 1 47s

Start the Service for MongoDB

$ kubectl apply -f https://k8s.io/examples/application/guestbook/mongo-service.yaml

service/mongo created

$ kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 4d

mongo ClusterIP 10.152.183.208 <none> 27017/TCP 5s

Start the frontend

$ kubectl apply -f https://k8s.io/examples/application/guestbook/frontend-deployment.yaml

deployment.apps/frontend created

$ kubectl get pods -l app.kubernetes.io/name=guestbook -l app.kubernetes.io/component=frontend

NAME READY STATUS RESTARTS AGE

frontend-848d88c7c-qc7nx 1/1 Running 0 4m5s

frontend-848d88c7c-pg2gb 1/1 Running 0 4m5s

frontend-848d88c7c-dj96j 1/1 Running 0 4m5s
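
A note on the selector used above: as far as I know, when `-l` is given twice kubectl keeps only the last value, so that command effectively filters on the component label alone. To require both labels, they can be joined with a comma in a single `-l`. A sketch; `frontend_pods` and `KUBECTL` are hypothetical names.

```shell
# List pods that carry BOTH labels (comma means logical AND in a selector).
# KUBECTL can be overridden (e.g. KUBECTL=echo) for a dry run.
frontend_pods() {
  ${KUBECTL:-kubectl} get pods \
    -l 'app.kubernetes.io/name=guestbook,app.kubernetes.io/component=frontend'
}

# Usage: frontend_pods
```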

Start the frontend Service

$ kubectl apply -f https://k8s.io/examples/application/guestbook/frontend-service.yaml

service/frontend created

$ kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 4d

mongo ClusterIP 10.152.183.208 <none> 27017/TCP 6m50s

frontend ClusterIP 10.152.183.53 <none> 80/TCP 8s

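Up to here the flow is roughly the same as the Redis version; the master/slave split is just gone.

No good. The page shows up, but I get an error that looks like it can't connect to MongoDB.

I can't follow the English.

Does this basically mean it can only be reached from the network inside the k8s cluster?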

Creating the Frontend Service

The mongo Services you applied is only accessible within the Kubernetes cluster because the default type for a Service is ClusterIP. ClusterIP provides a single IP address for the set of Pods the Service is pointing to. This IP address is accessible only within the cluster.

If you want guests to be able to access your guestbook, you must configure the frontend Service to be externally visible, so a client can request the Service from outside the Kubernetes cluster. However a Kubernetes user you can use kubectl port-forward to access the service even though it uses a ClusterIP.

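Hmm, the communication from the frontend to the backend doesn't seem to work, but where does this traffic actually go from and to...

If the browser were going to mongo directly, I could sort of see why it can't connect, but if the frontend server is the one talking to mongo, then the problem would be in the cluster-internal communication...

This is probably name resolution failing inside the cluster...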

$ lxc exec mk8s1 -- kubectl exec -it frontend-848d88c7c-6cnh8 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

root@frontend-848d88c7c-6cnh8:/var/www/html# cat

controllers.js guestbook.php index.html

root@frontend-848d88c7c-6cnh8:/var/www/html# grep mongo guestbook.php

// echo extension_loaded("mongodb") ? "loaded\n" : "not loaded\n";

$host = 'mongo';

$mongo_host = "mongodb+srv://$host/guestbook?retryWrites=true&w=majority";

$manager = new MongoDB\Driver\Manager("mongodb://$host");

echo '{"error": "An error occured connecting to mongo ' . $host . '"}';

$host = 'mongo';

$manager = new MongoDB\Driver\Manager("mongodb://$host");

echo '{"error": "An error occured connecting to mongo ' . $host . '"}';

root@frontend-848d88c7c-6cnh8:/var/www/html#
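
Since guestbook.php connects to the host name `mongo`, the suspicion can be checked directly by resolving that name from inside a frontend pod. A hedged sketch: it assumes the frontend image ships `getent` (typical for glibc-based images, but not confirmed here), and `resolve_in_pod` and `KUBECTL` are names made up for this example. Looking at `/etc/resolv.conf` inside the pod would be another way to check.

```shell
# Resolve a Service name from inside a pod of the given deployment.
# The exit status is non-zero when the name cannot be resolved.
# KUBECTL can be overridden (e.g. KUBECTL=echo) for a dry run.
resolve_in_pod() {
  deploy="$1"   # deployment to exec into, e.g. frontend
  host="$2"     # name to resolve, e.g. mongo
  ${KUBECTL:-kubectl} exec "deploy/$deploy" -- getent hosts "$host"
}

# Usage: resolve_in_pod frontend mongo
```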

So it's a DNS issue. There's probably a microk8s add-on for that.

$ microk8s status | grep dns

dns # CoreDNS

$ microk8s enable dns

Enabling DNS

Applying manifest

serviceaccount/coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

clusterrole.rbac.authorization.k8s.io/coredns created

clusterrolebinding.rbac.authorization.k8s.io/coredns created

Restarting kubelet

Adding argument --cluster-domain to nodes.

Configuring node 10.116.214.130

Configuring node 10.116.214.113

Configuring node 10.116.214.109

Configuring node 10.116.214.195

Adding argument --cluster-dns to nodes.

Configuring node 10.116.214.130

Configuring node 10.116.214.113

Configuring node 10.116.214.109

Configuring node 10.116.214.195

Restarting nodes.

Configuring node 10.116.214.130

Configuring node 10.116.214.113

Configuring node 10.116.214.109

Configuring node 10.116.214.195

DNS is enabled
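
"DNS is enabled" only means the manifest was applied; whether CoreDNS is actually running can be checked against the `coredns` deployment that the output above shows being created. A minimal sketch; `dns_ready` and `KUBECTL` are hypothetical names.

```shell
# Wait until the coredns deployment in kube-system has finished rolling out.
# KUBECTL can be overridden (e.g. KUBECTL=echo) for a dry run.
dns_ready() {
  timeout="${1:-120s}"
  ${KUBECTL:-kubectl} rollout status deployment/coredns \
    -n kube-system --timeout="$timeout"
}

# Usage: dns_ready 120s
```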

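I thought that would do it, but no luck.

Maybe the pods haven't picked up DNS yet...

What if I just shut the whole cluster down once and restart it?

Bringing up three frontends would probably take a while, so I'll scale down to two and then restart.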

$ kubectl scale deployment frontend --replicas=2

$ lxc stop mk8s{1,2,3,4}; lxc start mk8s{1,2,3,4}

$ seq 4 | xargs -I{} -P4 -n1 lxc exec mk8s{} -- microk8s status | grep nodes

datastore master nodes: 10.116.214.195:19001 10.116.214.109:19001 10.116.214.130:19001

datastore standby nodes: 10.116.214.113:19001

datastore master nodes: 10.116.214.195:19001 10.116.214.109:19001 10.116.214.130:19001

datastore standby nodes: 10.116.214.113:19001

datastore master nodes: 10.116.214.195:19001 10.116.214.109:19001 10.116.214.130:19001

datastore standby nodes: 10.116.214.113:19001

datastore master nodes: 10.116.214.195:19001 10.116.214.109:19001 10.116.214.130:19001

datastore standby nodes: 10.116.214.113:19001

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

frontend-848d88c7c-9lpc4 1/1 Running 1 9m35s

frontend-848d88c7c-6cnh8 1/1 Running 1 11m

mongo-75f59d57f4-mtqq2 1/1 Running 1 127m
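
A lighter alternative that might have been enough (it was not tried in this log): as I understand it, pods created before the kubelet received `--cluster-dns` keep their old resolver settings, so recreating only the affected pods could replace a full cluster restart. A sketch under that assumption; `restart_deployment` and `KUBECTL` are hypothetical names.

```shell
# Recreate the pods of one deployment and wait for the new ones to be ready.
# KUBECTL can be overridden (e.g. KUBECTL=echo) for a dry run.
restart_deployment() {
  name="$1"   # deployment name, e.g. frontend
  ${KUBECTL:-kubectl} rollout restart "deployment/$name" &&
  ${KUBECTL:-kubectl} rollout status "deployment/$name"
}

# Usage: restart_deployment frontend
```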

And then, exposing it externally on port 8080 with kubectl port-forward as a proxy...

$ lxc list mk8s1

+-------+---------+-----------------------------+----------------------------------------------+-----------+-----------+

| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS |

+-------+---------+-----------------------------+----------------------------------------------+-----------+-----------+

| mk8s1 | RUNNING | 10.116.214.195 (eth0) | fd42:d878:2ae:7d9e:216:3eff:fe81:8105 (eth0) | CONTAINER | 1 |

| | | 10.1.238.128 (vxlan.calico) | | | |

+-------+---------+-----------------------------+----------------------------------------------+-----------+-----------+

$ kubectl port-forward svc/frontend --address 0.0.0.0 8080:80

Forwarding from 0.0.0.0:8080 -> 80

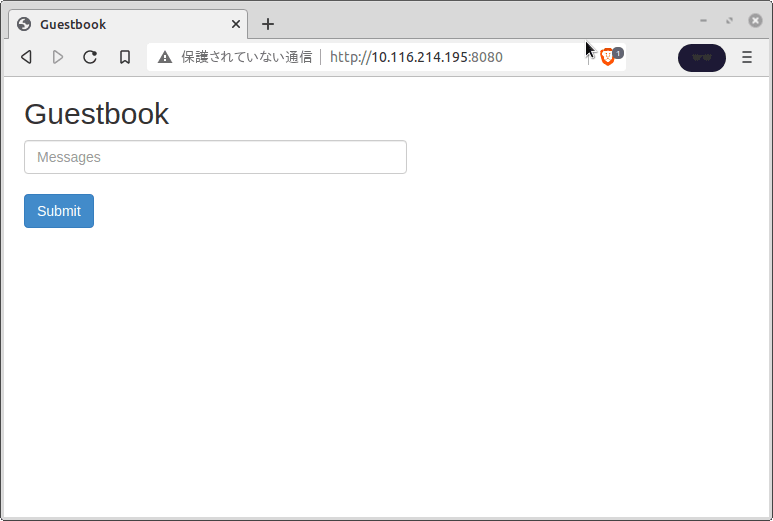

Oh

Ohhhh

It works!

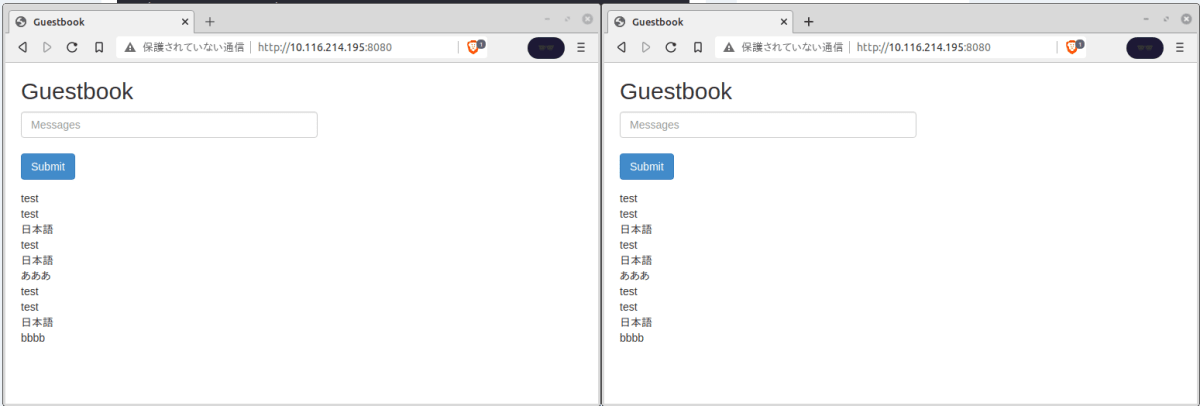

Reading the source hinted that resolving the name mongo on the server side was failing, which is what saved me. Without that, this would have stayed an unsolved mystery.

For now, with kubectl port-forward the only way in is mk8s1 on port 8080, so it would be better to enable MetalLB and assign an IP through a LoadBalancer Service.
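
The MetalLB idea could look roughly like the following. This is an untested sketch: the address range is purely an example and must be replaced with addresses that are unused on the LXD bridge, and `expose_with_metallb`, `MICROK8S` and `KUBECTL` are names invented for illustration.

```shell
# Enable the MetalLB add-on with an address pool, then switch the frontend
# Service to type LoadBalancer so it receives an IP from that pool.
# MICROK8S and KUBECTL can be overridden (e.g. with echo) for a dry run.
expose_with_metallb() {
  range="$1"   # pool MetalLB may hand out, e.g. 10.116.214.200-10.116.214.210
  ${MICROK8S:-microk8s} enable "metallb:$range" &&
  ${KUBECTL:-kubectl} patch service frontend \
    -p '{"spec":{"type":"LoadBalancer"}}'
}

# Usage (example range only):
#   expose_with_metallb 10.116.214.200-10.116.214.210
#   kubectl get service frontend   # EXTERNAL-IP should then show an address
```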

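And seriously, take down the Redis tutorial...

That's way too much of a trap...

For now, I'll write up parts 2 and 3 as articles before I forget all this, and call it done.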