MicroK8s Self-Study 1: Building a k8s cluster with MicroK8s in LXD and seeing how it behaves

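I'd been curious about k8s for a while without really understanding it, so I wanted to get my hands on it.

Setting up an environment to play with felt like a hassle, so I kept putting it off, but MicroK8s looked easy to use, so I'm going to give it a try.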

Here's a summary of what I did.

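I don't want to pollute my own environment, so I'll run it on LXD. There seems to be an LXD profile for it in the MicroK8s GitHub repository.

First, create the profile, apply it, and launch a container.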

```
$ lxc profile create mk8s
$ wget -O - -q https://raw.githubusercontent.com/ubuntu/microk8s/master/tests/lxc/microk8s-zfs.profile | lxc profile edit mk8s
$ lxc launch -p default -p mk8s ubuntu:20.04 mk8s
```

Install microk8s.

```
$ lxc shell mk8s
root@mk8s:~# snap install microk8s --classic
```

I have no idea what any of the commands are...

Is this how you use it?

```
# microk8s kubectl get all
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 46s
```

It looks like it can use images from Docker Hub, so let's bring up nginx.

```
root@mk8s:~# microk8s kubectl create deployment nginx --image=nginx
deployment.apps/nginx created
```

It seems to have worked, but how do I check that...

Typing microk8s every time is a pain, so I'll just set an alias.

```
root@mk8s:~# alias kubectl="microk8s kubectl"
```

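I couldn't figure out how to check that nginx was running, but apparently this exposes the deployment's port 80 as a NodePort service, reachable on a port of the node (the LXD container) itself.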

```
root@mk8s:~# kubectl expose deployment nginx --type=NodePort --port=80 --name=nginx
service/nginx exposed
```

Looks like it got bound to port 30591 on the LXD container's IP.

```
root@mk8s:~# kubectl get service nginx
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
nginx NodePort 10.152.183.156 <none> 80:30591/TCP 80s
root@mk8s:~# w3m http://localhost:30591 -dump | head -n1
Welcome to nginx!
```
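Copying the port number out of the table by hand gets old quickly, so here's a small helper I'd use to pull the NodePort out of `kubectl get service` output. `nodeport_from_get_service` is a name I made up. It assumes the default column layout shown above: a header line is present, and PORT(S) looks like `port:nodePort/protocol`.

```bash
# Print the NodePort(s) from `kubectl get service ...` output on stdin.
# Assumes columns NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE with a header line,
# and PORT(S) entries like "80:30591/TCP" (service port : node port / protocol).
nodeport_from_get_service() {
  awk 'NR > 1 && $2 == "NodePort" {
    n = split($5, ports, ",")          # a service may expose several ports
    for (i = 1; i <= n; i++) {
      split(ports[i], pp, "[:/]")      # pp[1]=port, pp[2]=nodePort, pp[3]=protocol
      print pp[2]
    }
  }'
}
```

Usage would be something like `w3m -dump "http://localhost:$(kubectl get service nginx | nodeport_from_get_service)"`. If you'd rather skip the text parsing, `kubectl get service nginx -o jsonpath='{.spec.ports[0].nodePort}'` asks the API for the same value directly. That is probably the more robust option.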

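That's fine for checking locally, but when this runs in production for real, how do you make it reachable from the internet...

Only at this point did I find the official documentation.

It says to create /etc/rc.local and set up AppArmor, but it's working without that...

Why....

Well, it seems to work, so I guess it's fine....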

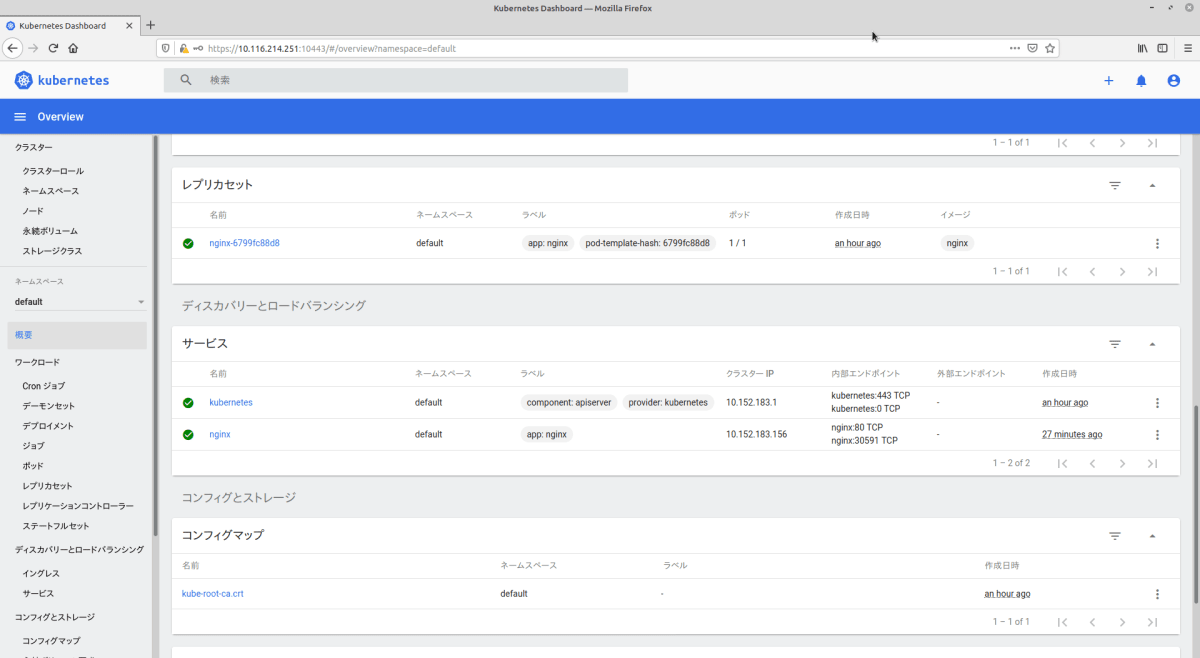

I wonder if enabling this Dashboard thing lets me use it from a browser.

```
root@mk8s:~# microk8s dashboard-proxy
(snip)
Dashboard will be available at https://127.0.0.1:10443
(snip)
[1]+ Stopped microk8s dashboard-proxy
root@mk8s:~# bg
root@mk8s:~# ifconfig eth0
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 10.116.214.251 netmask 255.255.255.0 broadcast 10.116.214.255
        inet6 fd42:d878:2ae:7d9e:216:3eff:fea8:9766 prefixlen 64 scopeid 0x0<global>
        inet6 fe80::216:3eff:fea8:9766 prefixlen 64 scopeid 0x20<link>
        ether 00:16:3e:a8:97:66 txqueuelen 1000 (Ethernet)
        RX packets 87223 bytes 635565324 (635.5 MB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 51318 bytes 4114148 (4.1 MB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions
```

Apparently you just replace 127.0.0.1 with the LXD container's eth0 address (10.116.214.251 here) and open it from a browser.
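Swapping 127.0.0.1 for the eth0 address by hand is easy enough. Still, here's a sketch that builds the URL from `ifconfig eth0` output. The function name is my own, and the default port 10443 is simply what `dashboard-proxy` printed above.

```bash
# Build the dashboard URL from `ifconfig eth0` output on stdin by taking the
# first IPv4 "inet" address. Optional $1 overrides the port (default 10443,
# as printed by `microk8s dashboard-proxy` above).
dashboard_url_from_ifconfig() {
  local port=${1:-10443}
  # "inet6" lines have a different first field, so only IPv4 matches.
  awk -v port="$port" '$1 == "inet" { print "https://" $2 ":" port; exit }'
}
```

For example: `lxc exec mk8s -- ifconfig eth0 | dashboard_url_from_ifconfig`. `lxc list mk8s` also shows the IPv4 address if you'd rather not enter the container at all.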

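Brave and Chrome wouldn't show the page because they didn't trust the certificate, so I used Firefox.

You log in by entering the token printed when the dashboard starts.

Things got sluggish, so I restarted it, and then it stopped working 😂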

```
$ lxc shell mk8s
root@mk8s:~# microk8s status --wait-ready
cannot change profile for the next exec call: No such file or directory
```
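Looking back, this error is probably exactly what the `/etc/rc.local` step in the official docs is for. My understanding is that the AppArmor profiles for the microk8s snap aren't reloaded when the LXD container restarts, so snap commands can no longer switch profiles. Below is a sketch of that file as I read the docs. The profile path `/var/lib/snapd/apparmor/profiles/snap.microk8s.*` comes from my reading of them, so double-check it against the current page.

```bash
#!/bin/bash
# /etc/rc.local inside the LXD container (runs once at boot).
# Reload the AppArmor profiles of the microk8s snap so that snap commands
# such as `microk8s status` can switch profiles again after a restart.
apparmor_parser --replace /var/lib/snapd/apparmor/profiles/snap.microk8s.*
exit 0
```

After creating it, make it executable with `chmod +x /etc/rc.local` so that it runs at boot.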

Hmm, I'll start over from scratch, and this time take a snapshot right after installing microk8s...

```
Session terminated, killing shell... ...killed.
$ lxc snapshot mk8s
```

That got me back up and running, but what should I do next...

Maybe I'll make another one and try building a cluster.

So, partly as review, I created another one.

```
$ lxc launch -p default -p mk8s ubuntu:20.04 mk8s2
Creating mk8s2
Starting mk8s2
$ lxc exec mk8s2 -- snap install microk8s --classic
Run configure hook of "microk8s" snap if present - \
microk8s (1.20/stable) v1.20.5 from Canonical✓ installed
$ lxc exec mk8s2 -- microk8s status --wait-ready
microk8s is running
```

To connect mk8s2 to mk8s, running

```
$ lxc exec mk8s -- microk8s add-node
From the node you wish to join to this cluster, run the following:
microk8s join 10.116.214.252:25000/1e348e8a8abc9471fc92a1be70f5b6cb
If the node you are adding is not reachable through the default interface you can use one of the following:
microk8s join 10.116.214.252:25000/1e348e8a8abc9471fc92a1be70f5b6cb
```

prints a join command, so if I run it on mk8s2...

```
$ lxc exec mk8s2 -- microk8s join 10.116.214.252:25000/1e348e8a8abc9471fc92a1be70f5b6cb
Contacting cluster at 10.116.214.252
Waiting for this node to finish joining the cluster. ..
```

Did it work?

```
$ lxc exec mk8s -- microk8s kubectl get no
NAME STATUS ROLES AGE VERSION
mk8s Ready <none> 46m v1.20.5-34+40f5951bd9888a
mk8s2 Ready <none> 101s v1.20.5-34+40f5951bd9888a
```

Looks like it worked.
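The join command has to be copied from one container to the other every time. Here's a rough sketch that chains `add-node` and `join` through `lxc exec` instead. `first_join_cmd` and `join_lxd_node` are hypothetical helpers of mine. They assume the first `microk8s join ...` line printed by `add-node` is reachable from the new container, which was true in this LXD setup.

```bash
# Extract the first "microk8s join <ip>:25000/<token>" line from
# `microk8s add-node` output on stdin.
first_join_cmd() {
  grep -m1 -E '^[[:space:]]*microk8s join ' | sed -E 's/^[[:space:]]+//'
}

# Hypothetical helper: join LXD container $2 to the cluster that container $1 is in.
join_lxd_node() {
  local existing=$1 newcomer=$2 cmd
  cmd=$(lxc exec "$existing" -- microk8s add-node | first_join_cmd)
  [ -n "$cmd" ] || { echo "no join command found" >&2; return 1; }
  # $cmd is intentionally unquoted so it splits into "microk8s" "join" "<addr>".
  lxc exec "$newcomer" -- $cmd
}
```

With this, adding the third node would just be `join_lxd_node mk8s mk8s3`. A fresh `add-node` runs on each call, which lines up with needing a new join command per node.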

That said, this seems to be MicroK8s's own way of forming a cluster. I wonder how regular k8s clusters are put together...

Is nginx running on both?

```
$ lxc exec mk8s2 -- microk8s kubectl get service nginx
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
nginx NodePort 10.152.183.58 <none> 80:32279/TCP 43m
$ lxc exec mk8s -- microk8s kubectl get service nginx
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
nginx NodePort 10.152.183.58 <none> 80:32279/TCP 44m
```

Looks like it.

```
$ lxc list
+-------+---------+-----------------------------+----------------------------------------------+-----------+-----------+
| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS |
+-------+---------+-----------------------------+----------------------------------------------+-----------+-----------+
| mk8s | RUNNING | 10.116.214.252 (eth0) | fd42:d878:2ae:7d9e:216:3eff:fe5e:733a (eth0) | CONTAINER | 1 |
| | | 10.1.215.192 (vxlan.calico) | | | |
+-------+---------+-----------------------------+----------------------------------------------+-----------+-----------+
| mk8s2 | RUNNING | 10.116.214.179 (eth0) | fd42:d878:2ae:7d9e:216:3eff:fea2:9021 (eth0) | CONTAINER | 0 |
| | | 10.1.115.128 (vxlan.calico) | | | |
+-------+---------+-----------------------------+----------------------------------------------+-----------+-----------+
$ w3m http://10.116.214.252:32279 -dump| head -n1
Welcome to nginx!
$ w3m http://10.116.214.179:32279 -dump| head -n1
Welcome to nginx!
```

The docs say HA is enabled once the cluster has three or more nodes.

Let's add one more.

To add it, I had to issue a new join command with lxc exec mk8s -- microk8s add-node again, but otherwise it went fine.

```
$ lxc exec mk8s -- microk8s kubectl get no
NAME STATUS ROLES AGE VERSION
mk8s2 Ready <none> 25m v1.20.5-34+40f5951bd9888a
mk8s Ready <none> 70m v1.20.5-34+40f5951bd9888a
mk8s3 Ready <none> 86s v1.20.5-34+40f5951bd9888a
```

HA is enabled too.

```
$ lxc exec mk8s2 -- microk8s status --wait-ready | grep high-availability
high-availability: yes
$ lxc exec mk8s -- microk8s status --wait-ready | grep high-availability
high-availability: yes
$ lxc exec mk8s3 -- microk8s status --wait-ready | grep high-availability
high-availability: yes
```
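Checking each node one at a time is a bit tedious, so here's a sketch that extracts the `high-availability` value from `microk8s status` output. `ha_state` is my own name. It prints `yes` or `no` and exits non-zero if the line isn't there at all.

```bash
# Print the value of the "high-availability:" line from `microk8s status`
# output on stdin. Exits 1 if no such line exists.
ha_state() {
  awk '$1 == "high-availability:" { print $2; found = 1 }
       END { exit (found ? 0 : 1) }'
}
```

Looping over the nodes then looks like `for n in mk8s mk8s2 mk8s3; do printf '%s: ' "$n"; lxc exec "$n" -- microk8s status --wait-ready | ha_state; done`.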

I more or less get adding nodes, so let's try removing one...

```
$ lxc exec mk8s -- microk8s leave
Generating new cluster certificates.
Waiting for node to start.
```

That took quite a while, but did it work?

```
$ lxc exec mk8s -- microk8s kubectl get no
NAME STATUS ROLES AGE VERSION
mk8s Ready <none> 79s v1.20.5-34+40f5951bd9888a
$ lxc exec mk8s -- microk8s kubectl get pod
No resources found in default namespace.
$ lxc exec mk8s2 -- microk8s kubectl get no
NAME STATUS ROLES AGE VERSION
mk8s3 Ready <none> 8m3s v1.20.5-34+40f5951bd9888a
mk8s NotReady <none> 76m v1.20.5-34+40f5951bd9888a
mk8s2 Ready <none> 31m v1.20.5-34+40f5951bd9888a
$ lxc exec mk8s2 -- microk8s kubectl get pod
NAME READY STATUS RESTARTS AGE
nginx-6799fc88d8-5dmjm 1/1 Running 0 72m
```

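Hmm, from mk8s's own point of view the cluster is gone, but do the other nodes just see it as a node that's down and waiting to come back?

Oh, I didn't check whether HA had been disabled.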

```
$ lxc exec mk8s3 -- microk8s status --wait-ready | grep high-availability
high-availability: no
```

Looks right.

Let's try joining it back.

```
$ lxc exec mk8s2 -- microk8s add-node
From the node you wish to join to this cluster, run the following:
microk8s join 10.116.214.179:25000/25a78d80da41a7355ebb42a9bb3b969f
If the node you are adding is not reachable through the default interface you can use one of the following:
microk8s join 10.116.214.179:25000/25a78d80da41a7355ebb42a9bb3b969f
$ lxc exec mk8s -- microk8s join 10.116.214.179:25000/25a78d80da41a7355ebb42a9bb3b969f
Contacting cluster at 10.116.214.179
Waiting for this node to finish joining the cluster. ..
$ lxc exec mk8s -- microk8s kubectl get no
NAME STATUS ROLES AGE VERSION
mk8s3 Ready <none> 15h v1.20.5-34+40f5951bd9888a
mk8s2 Ready <none> 15h v1.20.5-34+40f5951bd9888a
mk8s Ready <none> 16h v1.20.5-34+40f5951bd9888a
$ lxc exec mk8s3 -- microk8s status --wait-ready | grep high-availability
high-availability: yes
```

Looks like it's back.

But how do you tell the master apart from the worker nodes...

Ah, all three are masters.

```
$ lxc exec mk8s -- microk8s status
microk8s is running
high-availability: yes
datastore master nodes: 10.116.214.179:19001 10.116.214.86:19001 10.116.214.252:19001
```

I want to see what happens with a standby node, so I'll add a fourth one.

```
$ lxc exec mk8s4 -- microk8s join 10.116.214.252:25000/40d4a93fa1168308dccd35fb267daf50
Contacting cluster at 10.116.214.252
Waiting for this node to finish joining the cluster. ..
$ lxc exec mk8s4 -- microk8s status
microk8s is running
high-availability: yes
datastore master nodes: 10.116.214.179:19001 10.116.214.86:19001 10.116.214.252:19001
datastore standby nodes: 10.116.214.208:19001
```

It was added as a standby node.

```
$ lxc exec mk8s4 -- microk8s kubectl get pod
NAME READY STATUS RESTARTS AGE
nginx-6799fc88d8-rhs9q 1/1 Running 0 15h
$ lxc exec mk8s4 -- microk8s kubectl get service
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 16h
nginx NodePort 10.152.183.58 <none> 80:32279/TCP 16h
```

mk8s4 only just started, yet the running nginx shows the same AGE as on the other nodes...

So is only one of these actually running?

Let's add another pod. Yes, let's.

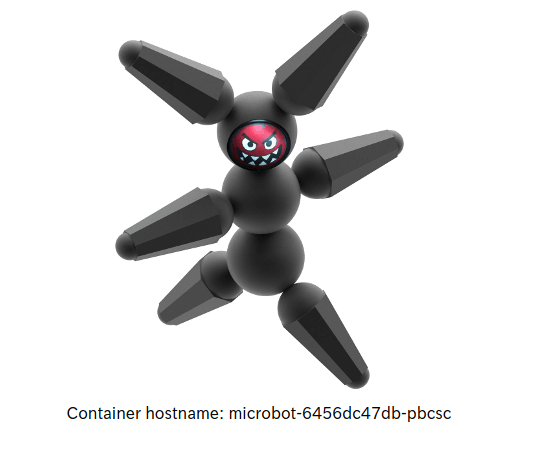

```
$ lxc exec mk8s4 -- microk8s kubectl create deployment microbot --image=dontrebootme/microbot:v1
deployment.apps/microbot created
$ lxc exec mk8s4 -- microk8s kubectl get pod
NAME READY STATUS RESTARTS AGE
nginx-6799fc88d8-rhs9q 1/1 Running 0 15h
microbot-5f5499d479-zfb8q 1/1 Running 0 55s
$ lxc exec mk8s -- microk8s kubectl get pod
NAME READY STATUS RESTARTS AGE
nginx-6799fc88d8-rhs9q 1/1 Running 0 15h
microbot-5f5499d479-zfb8q 1/1 Running 0 68s
$ ps -fC nginx
UID PID PPID C STIME TTY TIME CMD
root 378493 378458 0 09:12 ? 00:00:00 nginx: master process nginx -g daemon off;
systemd+ 378546 378493 0 09:12 ? 00:00:00 nginx: worker process
root 752805 752802 0 10:09 ? 00:00:00 nginx: master process /usr/sbin/nginx
systemd+ 752807 752805 0 10:09 ? 00:00:00 nginx: worker process
```

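Ah, so there's only one process per deployment across the whole cluster. The second one, `/usr/sbin/nginx`, appeared when microbot started, so it seems to be the web server inside the microbot container. I'd assumed one copy would run on every node.

Let's expose port 80 for this one too.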

```
$ lxc exec mk8s -- microk8s kubectl expose deployment microbot --type=NodePort --port=80 --name=microbot-service
service/microbot-service exposed
$ lxc exec mk8s2 -- microk8s kubectl get service microbot-service
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
microbot-service NodePort 10.152.183.208 <none> 80:31939/TCP 72s
```

31939, then.

```
$ lxc list | grep eth0
| mk8s | RUNNING | 10.116.214.252 (eth0) | fd42:d878:2ae:7d9e:216:3eff:fe5e:733a (eth0) | CONTAINER | 1 |
| mk8s2 | RUNNING | 10.116.214.179 (eth0) | fd42:d878:2ae:7d9e:216:3eff:fea2:9021 (eth0) | CONTAINER | 0 |
| mk8s3 | RUNNING | 10.116.214.86 (eth0) | fd42:d878:2ae:7d9e:216:3eff:fe82:5e6d (eth0) | CONTAINER | 0 |
| mk8s4 | RUNNING | 10.116.214.208 (eth0) | fd42:d878:2ae:7d9e:216:3eff:fec6:fd1b (eth0) | CONTAINER | 0 |
$ w3m -dump http://10.116.214.252:31939 | grep hostname
Container hostname: microbot-5f5499d479-zfb8q
$ w3m -dump http://10.116.214.179:31939 | grep hostname
Container hostname: microbot-5f5499d479-zfb8q
```

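I can reach it through port 31939 on any of the LXD containers, but the only thing actually running is the single pod microbot-5f5499d479-zfb8q?

I see: you set a desired state, and Kubernetes keeps working to bring the current state in line with it.

And the current state is: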

```
$ lxc exec mk8s2 -- microk8s kubectl get rs
NAME DESIRED CURRENT READY AGE
nginx-6799fc88d8 1 1 1 17h
microbot-5f5499d479 1 1 1 15m
```

DESIRED is the desired state and CURRENT is the current state, so matching numbers mean things are stable.
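To make the DESIRED vs CURRENT idea a bit more concrete, this sketch reads `kubectl get rs` output and flags any ReplicaSet that hasn't caught up yet. I treat DESIRED == CURRENT == READY as "stable". This only observes the state; the actual reconciling is done by Kubernetes' own controllers, not by anything like this. `rs_converged` is a made-up name.

```bash
# Read `kubectl get rs` output (NAME DESIRED CURRENT READY AGE) on stdin and
# print "stable" or "converging" per ReplicaSet. Exits 1 if any is converging.
rs_converged() {
  awk 'NR > 1 {
    ok = ($2 == $3 && $3 == $4)        # desired == current == ready
    print $1, (ok ? "stable" : "converging")
    if (!ok) bad = 1
  }
  END { exit (bad ? 1 : 0) }'
}
```

Usage: `microk8s kubectl get rs | rs_converged`. For a single Deployment, `kubectl rollout status deployment/microbot` waits until its rollout has finished, which covers the same need without any parsing.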

So if I raise DESIRED, the number of running instances (and nginx processes) should go up too. Let's try it with microbot.

```
$ lxc exec mk8s -- microk8s kubectl scale --replicas=4 deployment microbot
deployment.apps/microbot scaled
$ lxc exec mk8s -- microk8s kubectl get deployments
NAME READY UP-TO-DATE AVAILABLE AGE
nginx 1/1 1 1 17h
microbot 4/4 4 4 28m
```

Oh.

```
$ ps -fC nginx
UID PID PPID C STIME TTY TIME CMD
root 378493 378458 0 09:12 ? 00:00:00 nginx: master process nginx -g daemon off;
systemd+ 378546 378493 0 09:12 ? 00:00:00 nginx: worker process
root 752805 752802 0 10:09 ? 00:00:00 nginx: master process /usr/sbin/nginx
systemd+ 752807 752805 0 10:09 ? 00:00:00 nginx: worker process
root 1047125 1047114 0 10:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
systemd+ 1047131 1047125 0 10:37 ? 00:00:00 nginx: worker process
root 1049689 1049686 0 10:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
systemd+ 1049690 1049689 0 10:37 ? 00:00:00 nginx: worker process
root 1052785 1052782 0 10:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
systemd+ 1052789 1052785 0 10:37 ? 00:00:00 nginx: worker process
```

Ooh!

Responses really do seem to come back from all four instances.

```
$ for i in $(seq 100);do w3m -dump http://10.116.214.208:31939 | grep hostname ; done | sort | uniq -c
32 Container hostname: microbot-5f5499d479-bxfnh
27 Container hostname: microbot-5f5499d479-d76kz
18 Container hostname: microbot-5f5499d479-tzvh8
23 Container hostname: microbot-5f5499d479-zfb8q
```

I wonder how they got distributed across the nodes...

```
$ for i in $(echo mk8s mk8s2 mk8s3 mk8s4); do echo "$i";lxc exec $i -- ps -fC nginx;echo ; done
mk8s
UID PID PPID C STIME TTY TIME CMD
root 170006 170003 0 01:09 ? 00:00:00 nginx: master process /usr/sbin/nginx
systemd+ 170007 170006 0 01:09 ? 00:00:00 nginx: worker process
root 222975 222972 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
systemd+ 222976 222975 0 01:37 ? 00:00:00 nginx: worker process

mk8s2
UID PID PPID C STIME TTY TIME CMD
root 47030 47008 0 00:12 ? 00:00:00 nginx: master process nginx -g daemon off;
systemd+ 47078 47030 0 00:12 ? 00:00:00 nginx: worker process

mk8s3
UID PID PPID C STIME TTY TIME CMD

mk8s4
UID PID PPID C STIME TTY TIME CMD
root 44035 44031 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
systemd+ 44036 44035 0 01:37 ? 00:00:00 nginx: worker process
root 44223 44220 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
systemd+ 44224 44223 0 01:37 ? 00:00:00 nginx: worker process
```

Nothing is running on mk8s3...

I wondered whether that's because it's the standby node, but the standby node is mk8s4...

```
$ lxc list | grep eth0 | awk '{print $2,$6}'
mk8s 10.116.214.252
mk8s2 10.116.214.179
mk8s3 10.116.214.86
mk8s4 10.116.214.208
$ lxc exec mk8s4 -- microk8s status | grep nodes
datastore master nodes: 10.116.214.179:19001 10.116.214.86:19001 10.116.214.252:19001
datastore standby nodes: 10.116.214.208:19001
```

Hmm, judging by this, the master/standby distinction only matters for HA (the datastore), and pod placement doesn't seem to care about it.
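To see the datastore roles by container name instead of matching IPs by eye, here's a sketch that joins the `name ip` pairs from the `lxc list | awk` pipeline above with a saved `microk8s status` output. `datastore_roles` is a hypothetical helper. Nodes that appear in neither list are shown as `-`.

```bash
# stdin: "name ip" pairs; $1: file containing `microk8s status` output.
# Prints "name ip role" with role = master, standby, or "-".
datastore_roles() {
  awk -v sf="$1" '
    FILENAME == sf {
      # e.g. "datastore master nodes: 10.116.214.179:19001 10.116.214.86:19001 ..."
      if ($1 == "datastore" && $3 == "nodes:" && ($2 == "master" || $2 == "standby"))
        for (i = 4; i <= NF; i++) { split($i, hp, ":"); role[hp[1]] = $2 }
      next
    }
    { print $1, $2, (($2 in role) ? role[$2] : "-") }
  ' "$1" -
}
```

For example, save `lxc exec mk8s4 -- microk8s status > /tmp/status.txt`, then run `lxc list | grep eth0 | awk '{print $2,$6}' | datastore_roles /tmp/status.txt`. Putting this next to the per-node pod counts makes it easier to see that the two don't line up.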

Let's scale out the other deployment too.

```
$ lxc exec mk8s -- microk8s kubectl scale --replicas=2 deployment nginx
deployment.apps/nginx scaled
$ lxc exec mk8s -- microk8s kubectl get deployments
NAME READY UP-TO-DATE AVAILABLE AGE
microbot 4/4 4 4 51m
nginx 2/2 2 2 17h
$ for i in $(echo mk8s mk8s2 mk8s3 mk8s4); do echo "$i";lxc exec $i -- ps -fC nginx|grep root;echo ; done
mk8s
root 170006 170003 0 01:09 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 222975 222972 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 247273 247247 0 02:01 ? 00:00:00 nginx: master process nginx -g daemon off;

mk8s2
root 47030 47008 0 00:12 ? 00:00:00 nginx: master process nginx -g daemon off;

mk8s3

mk8s4
root 44035 44031 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 44223 44220 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
```

The new one landed on mk8s...

If I add one more...

```
$ lxc exec mk8s -- microk8s kubectl get deployments
NAME READY UP-TO-DATE AVAILABLE AGE
microbot 4/4 4 4 57m
nginx 3/3 3 3 17h
$ for i in $(echo mk8s mk8s2 mk8s3 mk8s4); do echo "$i";lxc exec $i -- ps -fC nginx|grep root;echo ; done
mk8s
root 170006 170003 0 01:09 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 222975 222972 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 247273 247247 0 02:01 ? 00:00:00 nginx: master process nginx -g daemon off;

mk8s2
root 47030 47008 0 00:12 ? 00:00:00 nginx: master process nginx -g daemon off;

mk8s3

mk8s4
root 44035 44031 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 44223 44220 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 75440 75419 0 02:07 ? 00:00:00 nginx: master process nginx -g daemon off;
```

It landed on mk8s4 🤣

Why won't it use mk8s2 and mk8s3?

Judging by this pattern, though, adding one more should finally use mk8s3 🙄

Well, my prediction was wrong.

```
$ for i in $(echo mk8s mk8s2 mk8s3 mk8s4); do echo "$i";lxc exec $i -- ps -fC nginx|grep root;echo ; done
mk8s
root 170006 170003 0 01:09 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 222975 222972 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 247273 247247 0 02:01 ? 00:00:00 nginx: master process nginx -g daemon off;

mk8s2
root 47030 47008 0 00:12 ? 00:00:00 nginx: master process nginx -g daemon off;
root 171826 171805 0 02:11 ? 00:00:00 nginx: master process nginx -g daemon off;

mk8s3

mk8s4
root 44035 44031 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 44223 44220 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 75440 75419 0 02:07 ? 00:00:00 nginx: master process nginx -g daemon off;
```

What is it thinking?

When I boldly doubled it, one finally started on mk8s3.

```
$ for i in $(echo mk8s mk8s2 mk8s3 mk8s4); do echo "$i";lxc exec $i -- ps -fC nginx|grep root;echo ; done
mk8s
root 170006 170003 0 01:09 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 222975 222972 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 247273 247247 0 02:01 ? 00:00:00 nginx: master process nginx -g daemon off;
root 261939 261910 0 02:13 ? 00:00:00 nginx: master process nginx -g daemon off;

mk8s2
root 47030 47008 0 00:12 ? 00:00:00 nginx: master process nginx -g daemon off;
root 171826 171805 0 02:11 ? 00:00:00 nginx: master process nginx -g daemon off;
root 174196 174172 0 02:13 ? 00:00:00 nginx: master process nginx -g daemon off;

mk8s3
root 162356 162335 0 02:14 ? 00:00:00 nginx: master process nginx -g daemon off;

mk8s4
root 44035 44031 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 44223 44220 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 75440 75419 0 02:07 ? 00:00:00 nginx: master process nginx -g daemon off;
root 82323 82292 0 02:13 ? 00:00:00 nginx: master process nginx -g daemon off;
```

I don't really get it, but does it put about four on each node?

Apparently not 🤣

```
$ lxc exec mk8s -- microk8s kubectl get deployments
NAME READY UP-TO-DATE AVAILABLE AGE
nginx 8/8 8 8 18h
microbot 8/8 8 8 67m
$ for i in $(echo mk8s mk8s2 mk8s3 mk8s4); do echo "$i";lxc exec $i -- ps -fC nginx|grep root;echo ; done
mk8s
root 170006 170003 0 01:09 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 222975 222972 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 247273 247247 0 02:01 ? 00:00:00 nginx: master process nginx -g daemon off;
root 261939 261910 0 02:13 ? 00:00:00 nginx: master process nginx -g daemon off;
root 265362 265359 0 02:16 ? 00:00:00 nginx: master process /usr/sbin/nginx

mk8s2
root 47030 47008 0 00:12 ? 00:00:00 nginx: master process nginx -g daemon off;
root 171826 171805 0 02:11 ? 00:00:00 nginx: master process nginx -g daemon off;
root 174196 174172 0 02:13 ? 00:00:00 nginx: master process nginx -g daemon off;
root 177331 177328 0 02:16 ? 00:00:00 nginx: master process /usr/sbin/nginx

mk8s3
root 162356 162335 0 02:14 ? 00:00:00 nginx: master process nginx -g daemon off;
root 165263 165260 0 02:16 ? 00:00:00 nginx: master process /usr/sbin/nginx

mk8s4
root 44035 44031 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 44223 44220 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 75440 75419 0 02:07 ? 00:00:00 nginx: master process nginx -g daemon off;
root 82323 82292 0 02:13 ? 00:00:00 nginx: master process nginx -g daemon off;
root 85324 85321 0 02:16 ? 00:00:00 nginx: master process /usr/sbin/nginx
```

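I don't really get it, but I guess this is the best distribution scheme some smart people came up with 🙄

What happens if I suddenly kill one node...

That's the nice thing about LXD: you can do reckless things like this without worrying.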

```
$ lxc delete mk8s -f
$ lxc exec mk8s2 -- microk8s kubectl get no
NAME STATUS ROLES AGE VERSION
mk8s3 Ready <none> 17h v1.20.5-34+40f5951bd9888a
mk8s2 Ready <none> 17h v1.20.5-34+40f5951bd9888a
mk8s4 Ready <none> 80m v1.20.5-34+40f5951bd9888a
mk8s NotReady <none> 18h v1.20.5-34+40f5951bd9888a
$ lxc exec mk8s4 -- microk8s status | grep nodes
datastore master nodes: 10.116.214.179:19001 10.116.214.86:19001 10.116.214.208:19001
datastore standby nodes: none
$ lxc list | grep eth0 | awk '{print $2,$6}'
mk8s2 10.116.214.179
mk8s3 10.116.214.86
mk8s4 10.116.214.208
```

mk8s4 was promoted to a datastore master node.

It took a while, but the pods also came back to 8.

```
$ lxc exec mk8s2 -- microk8s kubectl get deployments
NAME READY UP-TO-DATE AVAILABLE AGE
microbot 8/8 8 8 75m
nginx 8/8 8 8 18h
$ for i in $(echo mk8s mk8s2 mk8s3 mk8s4); do echo "$i";lxc exec $i -- ps -fC nginx|grep root;echo ; done
mk8s
Error: not found

mk8s2
root 47030 47008 0 00:12 ? 00:00:00 nginx: master process nginx -g daemon off;
root 171826 171805 0 02:11 ? 00:00:00 nginx: master process nginx -g daemon off;
root 174196 174172 0 02:13 ? 00:00:00 nginx: master process nginx -g daemon off;
root 177331 177328 0 02:16 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 186920 186917 0 02:24 ? 00:00:00 nginx: master process /usr/sbin/nginx

mk8s3
root 162356 162335 0 02:14 ? 00:00:00 nginx: master process nginx -g daemon off;
root 165263 165260 0 02:16 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 175673 175651 0 02:24 ? 00:00:00 nginx: master process nginx -g daemon off;
root 175778 175746 0 02:24 ? 00:00:00 nginx: master process nginx -g daemon off;

mk8s4
root 44035 44031 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 44223 44220 0 01:37 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 75440 75419 0 02:07 ? 00:00:00 nginx: master process nginx -g daemon off;
root 82323 82292 0 02:13 ? 00:00:00 nginx: master process nginx -g daemon off;
root 85324 85321 0 02:16 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 95005 95002 0 02:24 ? 00:00:00 nginx: master process /usr/sbin/nginx
root 95014 95011 0 02:24 ? 00:00:00 nginx: master process /usr/sbin/nginx
```

Now that there are more nodes, mk8s1 would be a handier name than mk8s, so I'll remove the NotReady mk8s and add it back under the new name.

```
$ lxc exec mk8s2 -- microk8s remove-node mk8s
Removal failed. Node mk8s is registered with dqlite. Please, run first 'microk8s leave' on the departing node.
If the node is not available anymore and will never attempt to join the cluster in the future use the '--force' flag
to unregister the node while removing it.
```

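Ah, because I deleted the whole LXD container out from under it, it can't be removed the normal way and needs --force.

The proper way is apparently to run the microk8s leave command on the departing server first.

Then again, in real production a server breaking is probably more common than a planned replacement, so either way is fine.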
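For the planned case, the order seems to be `microk8s leave` on the departing node, then `microk8s remove-node` on a node that stays. That would probably also explain why mk8s still showed up as NotReady on the other nodes earlier, when I had only run `leave`. Here's a sketch that wraps both paths. `remove_lxd_node` is my own name, and it assumes the Kubernetes node name matches the LXD container name, as it does here.

```bash
# Hypothetical helper: remove LXD container $1 from the MicroK8s cluster,
# running remove-node on surviving container $2.
remove_lxd_node() {
  local departing=$1 survivor=$2
  if lxc info "$departing" >/dev/null 2>&1; then
    # Planned removal: leave first, then unregister it from a remaining node.
    lxc exec "$departing" -- microk8s leave &&
      lxc exec "$survivor" -- microk8s remove-node "$departing"
  else
    # Container already gone: only --force can unregister it (see error above).
    lxc exec "$survivor" -- microk8s remove-node "$departing" --force
  fi
}
```

In this post's situation that would have been `remove_lxd_node mk8s mk8s2`. It takes the `--force` branch because `lxc info mk8s` fails once the container is deleted.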

```
$ lxc exec mk8s2 -- microk8s remove-node mk8s --force
$ lxc exec mk8s2 -- microk8s kubectl get no
NAME STATUS ROLES AGE VERSION
mk8s4 Ready <none> 91m v1.20.5-34+40f5951bd9888a
mk8s3 Ready <none> 17h v1.20.5-34+40f5951bd9888a
mk8s2 Ready <none> 17h v1.20.5-34+40f5951bd9888a
```

Gone, gone.

```
$ lxc launch -p default -p mk8s ubuntu:20.04 mk8s1
Creating mk8s1
Starting mk8s1
$ lxc exec mk8s1 -- snap install microk8s --classic
microk8s (1.20/stable) v1.20.5 from Canonical✓ installed
$ lxc exec mk8s1 -- microk8s status --wait-ready | grep nodes
datastore master nodes: 127.0.0.1:19001
datastore standby nodes: none
$ lxc exec mk8s2 -- microk8s add-node
From the node you wish to join to this cluster, run the following:
microk8s join 10.116.214.179:25000/b2d3ce3e99ad4c3b909d729e61603ff8
If the node you are adding is not reachable through the default interface you can use one of the following:
microk8s join 10.116.214.179:25000/b2d3ce3e99ad4c3b909d729e61603ff8
$ lxc exec mk8s1 -- microk8s join 10.116.214.179:25000/b2d3ce3e99ad4c3b909d729e61603ff8
Contacting cluster at 10.116.214.179
Waiting for this node to finish joining the cluster. ..
$ lxc exec mk8s1 -- microk8s kubectl get no
NAME STATUS ROLES AGE VERSION
mk8s3 Ready <none> 17h v1.20.5-34+40f5951bd9888a
mk8s2 Ready <none> 17h v1.20.5-34+40f5951bd9888a
mk8s4 Ready <none> 98m v1.20.5-34+40f5951bd9888a
mk8s1 Ready <none> 24s v1.20.5-34+40f5951bd9888a
$ lxc exec mk8s1 -- microk8s status --wait-ready | grep nodes
datastore master nodes: 10.116.214.179:19001 10.116.214.86:19001 10.116.214.208:19001
datastore standby nodes: 10.116.214.67:19001
```

OK, OK.

It turns out this would have been a much quicker way to see which pod runs where:

```
$ lxc exec mk8s1 -- microk8s kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-6799fc88d8-rhs9q 1/1 Running 0 17h 10.1.115.130 mk8s2 <none> <none>
microbot-5f5499d479-bxfnh 1/1 Running 0 68m 10.1.236.130 mk8s4 <none> <none>
microbot-5f5499d479-d76kz 1/1 Running 0 68m 10.1.236.129 mk8s4 <none> <none>
nginx-6799fc88d8-g942q 1/1 Running 0 38m 10.1.236.131 mk8s4 <none> <none>
nginx-6799fc88d8-hrd5j 1/1 Running 0 34m 10.1.115.131 mk8s2 <none> <none>
nginx-6799fc88d8-5snc5 1/1 Running 0 31m 10.1.115.132 mk8s2 <none> <none>
nginx-6799fc88d8-wqpb4 1/1 Running 0 31m 10.1.236.132 mk8s4 <none> <none>
nginx-6799fc88d8-lvxnb 1/1 Running 0 31m 10.1.217.196 mk8s3 <none> <none>
microbot-5f5499d479-74bql 1/1 Running 0 29m 10.1.236.133 mk8s4 <none> <none>
microbot-5f5499d479-2fzk8 1/1 Running 0 29m 10.1.217.197 mk8s3 <none> <none>
microbot-5f5499d479-df8cl 1/1 Running 0 29m 10.1.115.133 mk8s2 <none> <none>
microbot-5f5499d479-s5zrm 1/1 Running 0 20m 10.1.236.134 mk8s4 <none> <none>
microbot-5f5499d479-zgpgq 1/1 Running 0 20m 10.1.115.134 mk8s2 <none> <none>
microbot-5f5499d479-n7tx7 1/1 Running 0 20m 10.1.236.135 mk8s4 <none> <none>
nginx-6799fc88d8-zjt6r 1/1 Running 0 20m 10.1.217.198 mk8s3 <none> <none>
nginx-6799fc88d8-jstm7 1/1 Running 0 20m 10.1.217.199 mk8s3 <none> <none>
```
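With 16 pods, counting by eye gets hard, so here's a sketch that tallies pods per node and per app from the `-o wide` output. It assumes Deployment-style pod names (`<app>-<replicaset-hash>-<suffix>`) and that NODE is the seventh column, as above. `pods_per_node` is a made-up name.

```bash
# Read `kubectl get pod -o wide` output on stdin and print "node app count",
# sorted. The app name is the pod name minus its last two dash-separated parts.
pods_per_node() {
  awk 'NR > 1 {
    app = $1
    sub(/-[^-]+-[^-]+$/, "", app)      # strip "-<rs-hash>-<suffix>"
    count[$7 " " app]++                # $7 = NODE column
  }
  END { for (k in count) print k, count[k] }' | LC_ALL=C sort
}
```

Usage: `lxc exec mk8s1 -- microk8s kubectl get pod -o wide | pods_per_node`. To look at a single node, `kubectl get pod -o wide --field-selector spec.nodeName=mk8s4` filters on the server side instead.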

So this shows the information for each pod.

```
$ lxc exec mk8s1 -- microk8s kubectl describe deployments/microbot
Name:                   microbot
Namespace:              default
CreationTimestamp:      Thu, 15 Apr 2021 01:09:41 +0000
Labels:                 app=microbot
Annotations:            deployment.kubernetes.io/revision: 1
Selector:               app=microbot
Replicas:               8 desired | 8 updated | 8 total | 8 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  25% max unavailable, 25% max surge
Pod Template:
  Labels:  app=microbot
  Containers:
   microbot:
    Image:        dontrebootme/microbot:v1
    Port:         <none>
    Host Port:    <none>
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Progressing    True    NewReplicaSetAvailable
  Available      True    MinimumReplicasAvailable
OldReplicaSets:  <none>
NewReplicaSet:   microbot-5f5499d479 (8/8 replicas created)
Events:
  Type    Reason             Age  From                   Message
  ----    ------             ---- ----                   -------
  Normal  ScalingReplicaSet  38m  deployment-controller  Scaled up replica set microbot-5f5499d479 to 8
```

Looks like upgrading the app version will be easy too.

Having nginx around is getting confusing, so let's delete it.

```
$ lxc exec mk8s1 -- microk8s kubectl delete deploy/nginx
deployment.apps "nginx" deleted
$ lxc exec mk8s1 -- microk8s kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
microbot-5f5499d479-bxfnh 1/1 Running 0 174m 10.1.236.130 mk8s4 <none> <none>
microbot-5f5499d479-d76kz 1/1 Running 0 174m 10.1.236.129 mk8s4 <none> <none>
microbot-5f5499d479-74bql 1/1 Running 0 135m 10.1.236.133 mk8s4 <none> <none>
microbot-5f5499d479-2fzk8 1/1 Running 0 135m 10.1.217.197 mk8s3 <none> <none>
microbot-5f5499d479-df8cl 1/1 Running 0 135m 10.1.115.133 mk8s2 <none> <none>
microbot-5f5499d479-s5zrm 1/1 Running 0 127m 10.1.236.134 mk8s4 <none> <none>
microbot-5f5499d479-zgpgq 1/1 Running 0 127m 10.1.115.134 mk8s2 <none> <none>
microbot-5f5499d479-n7tx7 1/1 Running 0 127m 10.1.236.135 mk8s4 <none> <none>
```

Let's switch microbot from v1 to v2. Before the update, it looks like this:

```
$ lxc exec mk8s1 -- microk8s kubectl describe deploy/microbot
Name:                   microbot
Namespace:              default
CreationTimestamp:      Thu, 15 Apr 2021 01:09:41 +0000
Labels:                 app=microbot
Annotations:            deployment.kubernetes.io/revision: 1
Selector:               app=microbot
Replicas:               8 desired | 8 updated | 8 total | 8 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  25% max unavailable, 25% max surge
Pod Template:
  Labels:  app=microbot
  Containers:
   microbot:
    Image:        dontrebootme/microbot:v1
    Port:         <none>
    Host Port:    <none>
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Progressing    True    NewReplicaSetAvailable
  Available      True    MinimumReplicasAvailable
OldReplicaSets:  <none>
NewReplicaSet:   microbot-5f5499d479 (8/8 replicas created)
Events:          <none>
$ lxc exec mk8s1 -- microk8s kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
microbot-5f5499d479-bxfnh 1/1 Running 0 178m 10.1.236.130 mk8s4 <none> <none>
microbot-5f5499d479-d76kz 1/1 Running 0 178m 10.1.236.129 mk8s4 <none> <none>
microbot-5f5499d479-2fzk8 1/1 Running 0 139m 10.1.217.197 mk8s3 <none> <none>
microbot-5f5499d479-n7tx7 1/1 Terminating 0 131m 10.1.236.135 mk8s4 <none> <none>
microbot-5f5499d479-s5zrm 1/1 Terminating 0 131m 10.1.236.134 mk8s4 <none> <none>
microbot-6456dc47db-xk5j2 0/1 ContainerCreating 0 17s <none> mk8s2 <none> <none>
microbot-6456dc47db-brzkh 0/1 ContainerCreating 0 17s <none> mk8s1 <none> <none>
microbot-6456dc47db-lmt6r 1/1 Running 0 17s 10.1.238.131 mk8s1 <none> <none>
microbot-5f5499d479-zgpgq 1/1 Terminating 0 131m 10.1.115.134 mk8s2 <none> <none>
microbot-6456dc47db-24xkb 1/1 Running 0 6s 10.1.238.132 mk8s1 <none> <none>
microbot-5f5499d479-74bql 1/1 Terminating 0 139m 10.1.236.133 mk8s4 <none> <none>
microbot-6456dc47db-rzxng 1/1 Running 0 17s 10.1.238.129 mk8s1 <none> <none>
microbot-5f5499d479-df8cl 1/1 Terminating 0 139m 10.1.115.133 mk8s2 <none> <none>
microbot-6456dc47db-p8fpx 0/1 ContainerCreating 0 3s <none> mk8s3 <none> <none>
microbot-6456dc47db-pbcsc 0/1 ContainerCreating 0 2s <none> mk8s2 <none> <none>
```

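I guess the Terminating ones are the old pods that were running and the ContainerCreating ones are the new ones (the hash in the pod name changes from 5f5499d479 to 6456dc47db). Let's wait a bit.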

```
$ lxc exec mk8s1 -- microk8s kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
microbot-6456dc47db-lmt6r 1/1 Running 0 91s 10.1.238.131 mk8s1 <none> <none>
microbot-6456dc47db-24xkb 1/1 Running 0 80s 10.1.238.132 mk8s1 <none> <none>
microbot-6456dc47db-rzxng 1/1 Running 0 91s 10.1.238.129 mk8s1 <none> <none>
microbot-6456dc47db-xk5j2 1/1 Running 0 91s 10.1.115.135 mk8s2 <none> <none>
microbot-6456dc47db-pbcsc 1/1 Running 0 76s 10.1.115.136 mk8s2 <none> <none>
microbot-6456dc47db-brzkh 1/1 Running 0 91s 10.1.238.130 mk8s1 <none> <none>
microbot-6456dc47db-lg69j 1/1 Running 0 73s 10.1.238.133 mk8s1 <none> <none>
microbot-6456dc47db-p8fpx 1/1 Running 0 77s 10.1.217.200 mk8s3 <none> <none>
```

Looks like it has settled down.

```
$ lxc exec mk8s1 -- microk8s kubectl describe deploy/microbot
Name:                   microbot
Namespace:              default
CreationTimestamp:      Thu, 15 Apr 2021 01:09:41 +0000
Labels:                 app=microbot
Annotations:            deployment.kubernetes.io/revision: 2
Selector:               app=microbot
Replicas:               8 desired | 8 updated | 8 total | 8 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  25% max unavailable, 25% max surge
Pod Template:
  Labels:  app=microbot
  Containers:
   microbot:
    Image:        dontrebootme/microbot:v2
    Port:         <none>
    Host Port:    <none>
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Available      True    MinimumReplicasAvailable
  Progressing    True    NewReplicaSetAvailable
OldReplicaSets:  <none>
NewReplicaSet:   microbot-6456dc47db (8/8 replicas created)
Events:
  Type    Reason             Age                 From                   Message
  ----    ------             ----                ----                   -------
  Normal  ScalingReplicaSet  118s                deployment-controller  Scaled up replica set microbot-6456dc47db to 2
  Normal  ScalingReplicaSet  118s                deployment-controller  Scaled down replica set microbot-5f5499d479 to 6
  Normal  ScalingReplicaSet  118s                deployment-controller  Scaled up replica set microbot-6456dc47db to 4
  Normal  ScalingReplicaSet  107s                deployment-controller  Scaled down replica set microbot-5f5499d479 to 5
  Normal  ScalingReplicaSet  107s                deployment-controller  Scaled up replica set microbot-6456dc47db to 5
  Normal  ScalingReplicaSet  104s                deployment-controller  Scaled down replica set microbot-5f5499d479 to 4
  Normal  ScalingReplicaSet  104s                deployment-controller  Scaled up replica set microbot-6456dc47db to 6
  Normal  ScalingReplicaSet  103s                deployment-controller  Scaled down replica set microbot-5f5499d479 to 3
  Normal  ScalingReplicaSet  103s                deployment-controller  Scaled up replica set microbot-6456dc47db to 7
  Normal  ScalingReplicaSet  99s (x4 over 100s)  deployment-controller  (combined from similar events): Scaled down replica set microbot-5f5499d479 to 0
```

It now shows `Image: dontrebootme/microbot:v2`, so it looks like the update succeeded. This is impressive...
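The Events section reads like the RollingUpdate settings in action. With `25% max surge` and `25% max unavailable` on 8 replicas, the new ReplicaSet went to 2 first while the old one dropped to 6. As far as I know, Kubernetes rounds the surge percentage up and the unavailable percentage down. This sketch just does that arithmetic; `rolling_bounds` is my own name.

```bash
# Pod-count bounds during a RollingUpdate.
# $1 = replicas, $2 = maxSurge %, $3 = maxUnavailable % (defaults 25/25).
# maxSurge is rounded up and maxUnavailable rounded down.
rolling_bounds() {
  local replicas=$1 surge_pct=${2:-25} unavail_pct=${3:-25}
  local surge=$(( (replicas * surge_pct + 99) / 100 ))   # ceil(replicas * pct / 100)
  local unavail=$(( replicas * unavail_pct / 100 ))      # floor(replicas * pct / 100)
  echo "max_pods=$(( replicas + surge )) min_available=$(( replicas - unavail ))"
}
```

`rolling_bounds 8` prints `max_pods=10 min_available=6`. That matches the first steps in the events: new pods up to 2 (8 + 2 = 10 in total), then old pods down to 6.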

With w3m I can't tell what changed. Apparently it's the picture on the page that changes...

Let's roll back to v1 and check the picture.

```
$ lxc exec mk8s1 -- microk8s kubectl set image deployments/microbot microbot=dontrebootme/microbot:v1
deployment.apps/microbot image updated
$ lxc exec mk8s1 -- microk8s kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
microbot-5f5499d479-tr4mh 1/1 Running 0 63s 10.1.236.139 mk8s4 <none> <none>
microbot-5f5499d479-9wt6n 1/1 Running 0 63s 10.1.236.138 mk8s4 <none> <none>
microbot-5f5499d479-mrzsg 1/1 Running 0 63s 10.1.236.137 mk8s4 <none> <none>
microbot-5f5499d479-bb72t 1/1 Running 0 63s 10.1.236.136 mk8s4 <none> <none>
microbot-5f5499d479-2kk6b 1/1 Running 0 55s 10.1.236.140 mk8s4 <none> <none>
microbot-5f5499d479-9r9x2 1/1 Running 0 54s 10.1.115.138 mk8s2 <none> <none>
microbot-5f5499d479-n6nfj 1/1 Running 0 55s 10.1.115.137 mk8s2 <none> <none>
microbot-5f5499d479-zfj8q 1/1 Running 0 55s 10.1.217.201 mk8s3 <none> <none>
```

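Ooh.

At this point I noticed that during the rolling update, the new pods were started on the node that had room to spare (5 of the 8 landed on mk8s4). It feels like capacity is kept in reserve for exactly this.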
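You can check placement like this without eyeballing the table by tallying the NODE column (the 7th field of `kubectl get pods -o wide`) with awk. pods_per_node is a hypothetical helper. The sample is the output above:

```bash
#!/usr/bin/env bash
# Hypothetical helper: count pods per node from `kubectl get pods -o wide` text on stdin.
# Skips the header row; assumes NODE is the 7th whitespace-separated column.
pods_per_node() {
  awk 'NR > 1 { count[$7]++ } END { for (n in count) print n, count[n] }' | sort
}

# Sample: the `get pods -o wide` output after rolling back to v1
sample='NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
microbot-5f5499d479-tr4mh 1/1 Running 0 63s 10.1.236.139 mk8s4 <none> <none>
microbot-5f5499d479-9wt6n 1/1 Running 0 63s 10.1.236.138 mk8s4 <none> <none>
microbot-5f5499d479-mrzsg 1/1 Running 0 63s 10.1.236.137 mk8s4 <none> <none>
microbot-5f5499d479-bb72t 1/1 Running 0 63s 10.1.236.136 mk8s4 <none> <none>
microbot-5f5499d479-2kk6b 1/1 Running 0 55s 10.1.236.140 mk8s4 <none> <none>
microbot-5f5499d479-9r9x2 1/1 Running 0 54s 10.1.115.138 mk8s2 <none> <none>
microbot-5f5499d479-n6nfj 1/1 Running 0 55s 10.1.115.137 mk8s2 <none> <none>
microbot-5f5499d479-zfj8q 1/1 Running 0 55s 10.1.217.201 mk8s3 <none> <none>'

printf '%s\n' "$sample" | pods_per_node
# Live: microk8s kubectl get pods -o wide | pods_per_node
```

Newer kubectl versions can print RESTARTS as something like `1 (5m ago)`, which shifts the columns. `kubectl get pods -o custom-columns=NODE:.spec.nodeName --no-headers` would be a sturdier input in that case.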

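It looks like you can exec straight into a pod's container by pod name (strictly speaking it is not a Docker container here; MicroK8s runs containers with containerd).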

$ lxc exec mk8s1 -- microk8s kubectl get pods

NAME READY STATUS RESTARTS AGE

microbot-5f5499d479-tr4mh 1/1 Running 0 61m

microbot-5f5499d479-9wt6n 1/1 Running 0 61m

microbot-5f5499d479-mrzsg 1/1 Running 0 61m

microbot-5f5499d479-bb72t 1/1 Running 0 61m

microbot-5f5499d479-2kk6b 1/1 Running 0 61m

microbot-5f5499d479-9r9x2 1/1 Running 0 61m

microbot-5f5499d479-n6nfj 1/1 Running 0 61m

microbot-5f5499d479-zfj8q 1/1 Running 0 61m

$ lxc exec mk8s1 -- microk8s kubectl exec -it microbot-5f5499d479-tr4mh -- cat /etc/os-release

NAME="Alpine Linux"

ID=alpine

VERSION_ID=3.2.0

PRETTY_NAME="Alpine Linux v3.2"

HOME_URL="http://alpinelinux.org"

BUG_REPORT_URL="http://bugs.alpinelinux.org"
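If you want to script a check like this across pods, you can pull a single field out of that os-release text. os_release_value is a hypothetical helper. The sample is the output printed above:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the value for KEY from os-release text on stdin,
# stripping one pair of surrounding double quotes if present.
os_release_value() {
  awk -v key="$1" 'index($0, key "=") == 1 {
    v = substr($0, length(key) + 2)   # everything after "KEY="
    sub(/^"/, "", v)
    sub(/"$/, "", v)
    print v
  }'
}

# Sample: /etc/os-release from the microbot pod
sample='NAME="Alpine Linux"
ID=alpine
VERSION_ID=3.2.0
PRETTY_NAME="Alpine Linux v3.2"
HOME_URL="http://alpinelinux.org"
BUG_REPORT_URL="http://bugs.alpinelinux.org"'

printf '%s\n' "$sample" | os_release_value PRETTY_NAME   # → Alpine Linux v3.2
# Live (no -it, since the output is piped):
#   microk8s kubectl exec microbot-5f5499d479-tr4mh -- cat /etc/os-release | os_release_value ID
```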

I wonder what happens if I restart one of the LXD containers...

$ lxc exec mk8s4 -- shutdown now

$ lxc exec mk8s1 -- microk8s kubectl get node

NAME STATUS ROLES AGE VERSION

mk8s3 Ready <none> 20h v1.20.5-34+40f5951bd9888a

mk8s1 Ready <none> 3h19m v1.20.5-34+40f5951bd9888a

mk8s2 Ready <none> 21h v1.20.5-34+40f5951bd9888a

mk8s4 NotReady <none> 4h57m v1.20.5-34+40f5951bd9888a

$ lxc exec mk8s1 -- microk8s kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

microbot-5f5499d479-9r9x2 1/1 Running 0 104m 10.1.115.138 mk8s2 <none> <none>

microbot-5f5499d479-n6nfj 1/1 Running 0 104m 10.1.115.137 mk8s2 <none> <none>

microbot-5f5499d479-zfj8q 1/1 Running 0 104m 10.1.217.201 mk8s3 <none> <none>

microbot-5f5499d479-mrzsg 1/1 Terminating 1 104m 10.1.236.143 mk8s4 <none> <none>

microbot-5f5499d479-tr4mh 1/1 Terminating 1 104m 10.1.236.142 mk8s4 <none> <none>

microbot-5f5499d479-bb72t 1/1 Terminating 1 104m 10.1.236.145 mk8s4 <none> <none>

microbot-5f5499d479-2kk6b 1/1 Terminating 1 104m 10.1.236.144 mk8s4 <none> <none>

microbot-5f5499d479-9wt6n 1/1 Terminating 1 104m 10.1.236.141 mk8s4 <none> <none>

microbot-5f5499d479-wsngt 1/1 Running 0 29m 10.1.115.139 mk8s2 <none> <none>

microbot-5f5499d479-9dl68 1/1 Running 0 29m 10.1.238.135 mk8s1 <none> <none>

microbot-5f5499d479-qnjlf 1/1 Running 0 29m 10.1.238.134 mk8s1 <none> <none>

microbot-5f5499d479-fwgnx 1/1 Running 0 29m 10.1.238.136 mk8s1 <none> <none>

microbot-5f5499d479-cnk6b 1/1 Running 0 29m 10.1.238.137 mk8s1 <none> <none>

So once it detects that a node has died, the pods are redistributed to the other nodes so that 8 are running again.
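To double-check that count, you can tally the STATUS column (3rd field). For the listing above this gives 8 Running, matching the Deployment's 8 replicas, plus 5 Terminating pods still bound to the NotReady mk8s4. As far as I know, pods on a not-ready or unreachable node are only evicted after their default NoExecute toleration (300 seconds) expires, and they cannot finish terminating until that node's kubelet is back. I have not verified this on this cluster. status_counts is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: count pods per STATUS from `kubectl get pods` text on stdin.
# Skips the header row; assumes STATUS is the 3rd whitespace-separated column.
status_counts() {
  awk 'NR > 1 { count[$3]++ } END { for (s in count) print s, count[s] }' | sort
}

# Sample: `get pods -o wide` while mk8s4 was shut down
sample='NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
microbot-5f5499d479-9r9x2 1/1 Running 0 104m 10.1.115.138 mk8s2 <none> <none>
microbot-5f5499d479-n6nfj 1/1 Running 0 104m 10.1.115.137 mk8s2 <none> <none>
microbot-5f5499d479-zfj8q 1/1 Running 0 104m 10.1.217.201 mk8s3 <none> <none>
microbot-5f5499d479-mrzsg 1/1 Terminating 1 104m 10.1.236.143 mk8s4 <none> <none>
microbot-5f5499d479-tr4mh 1/1 Terminating 1 104m 10.1.236.142 mk8s4 <none> <none>
microbot-5f5499d479-bb72t 1/1 Terminating 1 104m 10.1.236.145 mk8s4 <none> <none>
microbot-5f5499d479-2kk6b 1/1 Terminating 1 104m 10.1.236.144 mk8s4 <none> <none>
microbot-5f5499d479-9wt6n 1/1 Terminating 1 104m 10.1.236.141 mk8s4 <none> <none>
microbot-5f5499d479-wsngt 1/1 Running 0 29m 10.1.115.139 mk8s2 <none> <none>
microbot-5f5499d479-9dl68 1/1 Running 0 29m 10.1.238.135 mk8s1 <none> <none>
microbot-5f5499d479-qnjlf 1/1 Running 0 29m 10.1.238.134 mk8s1 <none> <none>
microbot-5f5499d479-fwgnx 1/1 Running 0 29m 10.1.238.136 mk8s1 <none> <none>
microbot-5f5499d479-cnk6b 1/1 Running 0 29m 10.1.238.137 mk8s1 <none> <none>'

printf '%s\n' "$sample" | status_counts
# Live: microk8s kubectl get pods | status_counts
```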

$ lxc start mk8s4

$ lxc exec mk8s1 -- microk8s kubectl get node

NAME STATUS ROLES AGE VERSION

mk8s3 Ready <none> 21h v1.20.5-34+40f5951bd9888a

mk8s2 Ready <none> 21h v1.20.5-34+40f5951bd9888a

mk8s1 Ready <none> 3h57m v1.20.5-34+40f5951bd9888a

mk8s4 Ready <none> 5h35m v1.20.5-34+40f5951bd9888a

After starting it again, it went back to Ready.
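While waiting for a node to come back, a quick filter on `kubectl get node` output lists anything that isn't plainly Ready. Note that a cordoned node shows `Ready,SchedulingDisabled` and would also be printed. not_ready_nodes is a hypothetical helper, fed here with the output from while mk8s4 was shut down:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print names of nodes whose STATUS is not exactly "Ready".
# Input: `kubectl get node` text on stdin (header row skipped).
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Sample: `get node` while mk8s4 was down
sample='NAME STATUS ROLES AGE VERSION
mk8s3 Ready <none> 20h v1.20.5-34+40f5951bd9888a
mk8s1 Ready <none> 3h19m v1.20.5-34+40f5951bd9888a
mk8s2 Ready <none> 21h v1.20.5-34+40f5951bd9888a
mk8s4 NotReady <none> 4h57m v1.20.5-34+40f5951bd9888a'

printf '%s\n' "$sample" | not_ready_nodes   # → mk8s4
```

To block until a specific node recovers, `microk8s kubectl wait --for=condition=Ready node/mk8s4 --timeout=120s` should also work. The timeout value there is just an example.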

$ lxc exec mk8s1 -- microk8s kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

microbot-5f5499d479-9r9x2 1/1 Running 0 109m 10.1.115.138 mk8s2 <none> <none>

microbot-5f5499d479-n6nfj 1/1 Running 0 109m 10.1.115.137 mk8s2 <none> <none>

microbot-5f5499d479-zfj8q 1/1 Running 0 109m 10.1.217.201 mk8s3 <none> <none>

microbot-5f5499d479-wsngt 1/1 Running 0 34m 10.1.115.139 mk8s2 <none> <none>

microbot-5f5499d479-9dl68 1/1 Running 0 34m 10.1.238.135 mk8s1 <none> <none>

microbot-5f5499d479-qnjlf 1/1 Running 0 34m 10.1.238.134 mk8s1 <none> <none>

microbot-5f5499d479-fwgnx 1/1 Running 0 34m 10.1.238.136 mk8s1 <none> <none>

microbot-5f5499d479-cnk6b 1/1 Running 0 34m 10.1.238.137 mk8s1 <none> <none>

The pods that were stuck in Terminating are gone too. It recovered as if nothing had happened. Very handy.

Oh, there's autoscaling too.

Let's try it.

$ lxc exec mk8s1 -- microk8s kubectl autoscale deployment microbot --min=2 --max=10

horizontalpodautoscaler.autoscaling/microbot autoscaled

$ lxc exec mk8s1 -- microk8s kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

microbot Deployment/microbot <unknown>/80% 2 10 0 2s

Does this give a minimum of 2 and a maximum of 10 pods??

$ lxc exec mk8s3 -- microk8s kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

microbot-5f5499d479-zfj8q 1/1 Running 0 179m 10.1.217.201 mk8s3 <none> <none>

microbot-5f5499d479-rrvnm 1/1 Running 0 16m 10.1.238.139 mk8s1 <none> <none>

microbot-5f5499d479-b55pw 1/1 Running 0 78s 10.1.115.141 mk8s2 <none> <none>

microbot-5f5499d479-c8gb9 1/1 Running 0 78s 10.1.238.140 mk8s1 <none> <none>

microbot-5f5499d479-w8t9r 1/1 Running 0 78s 10.1.236.150 mk8s4 <none> <none>

microbot-5f5499d479-xt7wn 1/1 Running 0 78s 10.1.236.148 mk8s4 <none> <none>

microbot-5f5499d479-gmq5q 1/1 Running 0 78s 10.1.236.149 mk8s4 <none> <none>

microbot-5f5499d479-g4t22 1/1 Running 0 78s 10.1.238.141 mk8s1 <none> <none>

It doesn't go down to 2??

For now, let's set it to 2 with scale.

$ lxc exec mk8s1 -- microk8s kubectl scale --replicas=2 deployment/microbot

deployment.apps/microbot scaled

$ lxc exec mk8s3 -- microk8s kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

microbot-5f5499d479-zfj8q 1/1 Running 0 3h3m 10.1.217.201 mk8s3 <none> <none>

microbot-5f5499d479-wmrlj 1/1 Running 0 94s 10.1.115.142 mk8s2 <none> <none>

It did drop to 2, but apparently it doesn't scale down on its own 🤔

So many mysteries...

Let's put some load on it anyway.
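The `<unknown>/80%` under TARGETS is probably the key. `kubectl autoscale` without `--cpu-percent` uses a default CPU target (80% here), and when the HPA cannot read CPU metrics it reports `<unknown>` and leaves the replica count alone. In MicroK8s this likely means enabling the metrics-server addon (`microk8s enable metrics-server`) and giving the pods CPU requests, since utilization is measured against requests. I haven't verified this on this setup. Once metrics are available, the Kubernetes docs describe the core rule as desiredReplicas = ceil(currentReplicas × currentUtilization / targetUtilization), clamped to [min, max]. The sketch below implements only that. hpa_desired is hypothetical, the 10% and 200% utilizations are made-up inputs, and the real controller's tolerance band and scale-down stabilization window are ignored:

```bash
#!/usr/bin/env bash
# Hypothetical helper: core HPA rule for a utilization target (no tolerance, no stabilization).
#   desired = ceil(replicas * util / target), clamped to [min, max]
hpa_desired() {
  local replicas=$1 util=$2 target=$3 min=$4 max=$5
  local desired=$(( (replicas * util + target - 1) / target ))  # integer ceil
  if (( desired < min )); then desired=$min; fi
  if (( desired > max )); then desired=$max; fi
  echo "$desired"
}

hpa_desired 8 10 80 2 10    # 8 pods idling at 10% CPU → 2 (held at --min)
hpa_desired 2 200 80 2 10   # 2 pods at 200% CPU → 5
```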

$ ab -n 1000000 -c 100 http://10.116.214.67:31939/

This is ApacheBench, Version 2.3 <$Revision: 1843412 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking 10.116.214.67 (be patient)

Completed 100000 requests

Completed 200000 requests

Completed 300000 requests

Completed 400000 requests

Completed 500000 requests

Completed 600000 requests

Completed 700000 requests

Completed 800000 requests

Completed 900000 requests

Completed 1000000 requests

Finished 1000000 requests

Server Software: nginx/1.8.0

Server Hostname: 10.116.214.67

Server Port: 31939

Document Path: /

Document Length: 351 bytes

Concurrency Level: 100

Time taken for tests: 358.385 seconds

Complete requests: 1000000

Failed requests: 0

Total transferred: 583000000 bytes

HTML transferred: 351000000 bytes

Requests per second: 2790.29 [#/sec] (mean)

Time per request: 35.839 [ms] (mean)

Time per request: 0.358 [ms] (mean, across all concurrent requests)

Transfer rate: 1588.61 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 16 4.1 16 90

Processing: 0 20 5.6 19 114

Waiting: 0 15 5.5 13 92

Total: 0 36 6.2 35 169

Percentage of the requests served within a certain time (ms)

50% 35

66% 36

75% 37

80% 38

90% 42

95% 46

98% 53

99% 58

100% 169 (longest request)
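ab's summary figures can be re-derived from its raw totals, which helps when reading them:

* Requests per second is requests / time.
* "Time per request (mean)" is concurrency × time / requests.
* The "across all concurrent requests" line is time / requests.
* The transfer rate appears to be total bytes / 1024 / time (this reproduces the 1588.61 reported here).

ab_derived is a hypothetical helper. Its last digit can differ from ab's own output (2790.3 vs 2790.29) because ab divides by the unrounded elapsed time:

```bash
#!/usr/bin/env bash
# Hypothetical helper: recompute ab's derived metrics from its raw totals.
# Args: completed requests, concurrency (-c), time taken in seconds, total bytes transferred.
# Prints: requests/sec, mean time per request (ms), mean across all concurrent
# requests (ms), and transfer rate in KiB/s.
ab_derived() {
  awk -v n="$1" -v c="$2" -v t="$3" -v b="$4" 'BEGIN {
    printf "rps=%.1f tpr_ms=%.2f tpr_all_ms=%.3f kib_s=%.1f\n", n / t, c * t * 1000 / n, t * 1000 / n, b / 1024 / t
  }'
}

# Totals from the run above: -n 1000000 -c 100, 358.385 s, 583000000 bytes
ab_derived 1000000 100 358.385 583000000
```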

$ lxc exec mk8s3 -- microk8s kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

microbot-5f5499d479-zfj8q 1/1 Running 0 3h28m 10.1.217.201 mk8s3 <none> <none>

microbot-5f5499d479-wmrlj 1/1 Running 0 26m 10.1.115.142 mk8s2 <none> <none>

That's nginx for you, the server that got famous around the C10k problem: it barely feels the load 🤣

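How should I check this...

This is getting a bit scattered....

Summarized, I probably haven't done much, so let's write it up as an article.

The article is written, so I'll close this out here for now.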