Automatic margin removal in images with Python

Our Laboratory

Advent Calendar, Day 21


Article by エスカーニョ マルケス、ユイス

In this article I will explain a little project of mine that automates the removal of blank edges from images.

TL;DR:

We generate a binary mask from the image that marks whether each pixel has color (is non-white) or not, and use it to locate the pixels that belong to the graphic. Next, we find the first and last rows and columns containing those pixels, which gives the tightest bounding box around the graphic, and we simply crop the original image to that box.

My problem

My problem was that I had multiple drawings that were not centered and had white margins, but I needed perfectly cropped images to integrate into my websites. Therefore, I developed a small Python script to accomplish this task.

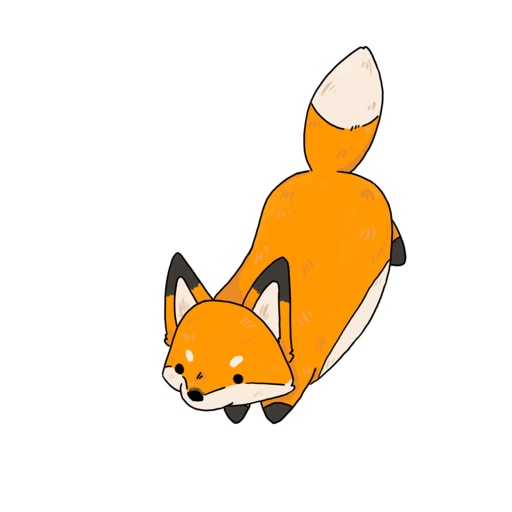

For example, the following image:

While it might not be obvious at first sight, this image has two main issues. The first is the extra blank space at the borders, which makes it hard to keep different graphics consistent, as each one will have a different margin size. The second is that the graphic is not centered, which complicates integrating it into a software application or webpage, as its position won't be consistent.

This can be fixed by hand in a photo editor such as GIMP or Photoshop, but that is a tedious process and impractical when you need to correct many images, so today we will automate it with a Python script.

How we will achieve this

There are multiple techniques that can be used to achieve our goal, but we will use a very simple and intuitive mechanism:

We will cut away the white borders until we reach non-white pixels. By doing so, we get an image with zero margins, so the graphic loses its borders and appears centered.

To do so, we will follow these three steps:

- Read the image data

- Detect the left, top, right, and bottom positions of the image.

- Cut and save the result.

0. Read the image data

For this task we will use two libraries, OpenCV and NumPy. OpenCV will handle the image loading and transformations, while NumPy will allow us to do matrix operations with Python.

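```python
import cv2
import numpy as np

input_path = "kitsune.png"
image = cv2.imread(input_path, cv2.IMREAD_UNCHANGED)  # keeps the alpha channel, if any
if image is None:
    raise FileNotFoundError(f"Could not read {input_path}")
# Grayscale copy used only to compute the crop box (1-channel files already are gray)
imgarr = image if image.ndim == 2 else cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
```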

`image` is a 3D matrix. The first dimension is the pixel row, the second is the pixel column, and the third holds the color channels. OpenCV stores them in (Blue, Green, Red) order rather than RGB, which is simply its default channel order, so the matrix scheme is: [[[B, G, R], ...], ...]. Because we load the file with `cv2.IMREAD_UNCHANGED`, a PNG with transparency also gets a fourth alpha channel: [[[B, G, R, A], ...], ...].

`imgarr` is a 2D NumPy matrix holding only grayscale values, because we don't need color information to calculate the boundaries of the graphic. We keep the original data in `image` so that, once all the cuts are calculated, we can apply them to the color version. The matrix scheme is: [[value, ...], ...]
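To make the channel order and the grayscale step concrete, here is a NumPy-only sketch of roughly what `cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)` computes. It assumes 8-bit input and uses the luma weights listed in OpenCV's color conversion documentation (Y = 0.299 R + 0.587 G + 0.114 B), reordered for BGR. `to_gray` is a hypothetical helper, and OpenCV's fixed-point arithmetic may differ from it by one gray level for some pixels.

```python
import numpy as np

# Luma weights for Y = 0.299 R + 0.587 G + 0.114 B, written in OpenCV's B, G, R order
BGR_WEIGHTS = np.array([0.114, 0.587, 0.299])


def to_gray(image):
    """Approximate cv2.cvtColor(image, cv2.COLOR_BGR2GRAY) for 2D, BGR, or BGRA uint8 arrays."""
    if image.ndim == 2:
        return image  # already single-channel
    bgr = image[:, :, :3].astype(np.float64)  # drop the alpha channel of BGRA images
    return np.rint(bgr @ BGR_WEIGHTS).astype(np.uint8)


# One white, one red, and one blue pixel, stored in BGR order
pixels = np.array([[[255, 255, 255], [0, 0, 255], [255, 0, 0]]], dtype=np.uint8)
print(to_gray(pixels).tolist())  # [[255, 76, 29]]
```

Note how pure red is written as [0, 0, 255]: the red value sits in the last position because of the BGR order.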

1. Detect the left, top, right, and bottom positions of the image.

We want to detect the bounding box of the graphic: the smallest rectangle that contains all of its non-white pixels.

To detect the borders we will use NumPy's `argwhere` function, which returns the (row, column) coordinates of every non-zero element of an array; for a boolean mask, that means every `True` element. Let's go step by step.

First, recall that `imgarr` is a 2D matrix of grayscale values from 0 to 255 describing the lightness of each pixel, where 0 is black and 255 is white; each row of the matrix is a row of pixels in the original file. We will use this matrix to generate a binary mask: another 2D matrix of the same size as `imgarr` that contains only `True` (1) or `False` (0), depending on whether the corresponding pixel is non-white or white.

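```python
tolerance = 10  # valid range: 1 to 254
# True where the pixel is darker than near-white, i.e. part of the graphic
mask = imgarr < (255 - tolerance)
```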

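At this point, `mask` holds 1 (`True`) for every non-white pixel and 0 (`False`) for every white one. `tolerance` is an arbitrary margin that decides how close to pure white a pixel must be to count as white: any gray value of `255 - tolerance` or above (245 and above with the default of 10) is treated as white, so slightly off-white pixels also count as margin.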
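A tiny worked example shows where the threshold falls with the default tolerance of 10. The gray values below are made up for illustration.

```python
import numpy as np

tolerance = 10  # gray values >= 255 - tolerance (here 245) count as white

# Hypothetical row of grayscale values, from black to pure white
imgarr = np.array([[0, 128, 244, 245, 250, 255]], dtype=np.uint8)

mask = imgarr < (255 - tolerance)
print(mask.astype(int).tolist())  # [[1, 1, 1, 0, 0, 0]]
```

Raising `tolerance` treats more off-white pixels as margin; lowering it toward 1 keeps anything that is not almost perfectly white.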

Now comes the interesting part. We could do a double for loop to find the top row limit:

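```python
# [BAD] Non-optimal solution: visits every pixel with Python loops
top = 0
found = False
for row in mask:
    for value in row:
        if value:  # non-white pixel found
            found = True
            break
    if found:
        break
    top += 1  # the whole row is white, move down one row
```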

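We would then repeat the same idea for the bottom, left, and right edges. However, nested Python loops visit pixels one by one, which is slow on large images. Instead, we can let NumPy do the work with vectorized operations: `np.argwhere` gives us the coordinates of all non-white pixels, and their minimum and maximum describe the bounding box.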

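```python
# Coordinates (row, column) of all non-white pixels.
coords = np.argwhere(mask)
if coords.size == 0:
    raise ValueError("The image is completely white; there is nothing to crop.")

# Bounding box of the non-white pixels: x indexes rows, y indexes columns.
x0, y0 = coords.min(axis=0)
x1, y1 = coords.max(axis=0) + 1  # +1 because slice ends are exclusive
```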

`coords` is an N×2 array with one (row, column) pair per non-white pixel. Taking the minimum along axis 0 gives the smallest row index (the top edge) and the smallest column index (the left edge); the maximum gives the bottom and right edges. We add 1 to the maximum because Python slices exclude their end index.
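Here is a small worked example on a hypothetical 5×6 mask with just two non-white pixels. Note that the top-left corner of the resulting box, (1, 1), is not itself a non-white pixel: `min` and `max` work on each axis independently.

```python
import numpy as np

# Hypothetical 5x6 mask with two non-white pixels
mask = np.zeros((5, 6), dtype=bool)
mask[1, 3] = True
mask[3, 1] = True

coords = np.argwhere(mask)
print(coords.tolist())  # [[1, 3], [3, 1]]

x0, y0 = coords.min(axis=0)      # smallest row, smallest column
x1, y1 = coords.max(axis=0) + 1  # largest row and column, +1 for exclusive slicing
print(int(x0), int(y0), int(x1), int(y1))  # 1 1 4 4
```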

We now have `x0`, `y0`, `x1`, and `y1`, which describe the tightest bounding box that still contains every non-white pixel. We are ready to jump to the next stage.
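As an aside, the row-by-row idea from the loop version can also be vectorized directly. This is a swapped-in variant, not the method used in this article: `mask.any(axis=1)` marks the rows that contain at least one non-white pixel, `mask.any(axis=0)` does the same for columns, and the first and last `True` entries give the edges. `bounds_by_projection` is a hypothetical helper name.

```python
import numpy as np


def bounds_by_projection(mask):
    """Return (x0, y0, x1, y1) of the True region, or None if mask is all False.

    x indexes rows and y indexes columns; x1 and y1 are exclusive.
    """
    rows = np.flatnonzero(mask.any(axis=1))  # rows containing a non-white pixel
    cols = np.flatnonzero(mask.any(axis=0))  # columns containing a non-white pixel
    if rows.size == 0:
        return None  # completely white image
    return int(rows[0]), int(cols[0]), int(rows[-1]) + 1, int(cols[-1]) + 1


mask = np.zeros((5, 6), dtype=bool)
mask[1, 3] = True
mask[3, 1] = True
print(bounds_by_projection(mask))  # (1, 1, 4, 4)
```

It produces the same box as the `argwhere` approach, but it only allocates one boolean per row and per column instead of a coordinate pair for every non-white pixel, which can matter for large, dense drawings.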

2. Cut and save the result.

This part is very easy after all the work of calculating the positions. We go back to the original color data in `image`. Although `image` is a 3D matrix, we only need to slice its first two axes: rows from `x0` to `x1` and columns from `y0` to `y1`. The channel axis is left untouched, so every color channel (and the alpha channel, if the file has one) is kept.

```python
cropped = image[x0:x1, y0:y1]
```
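A quick shape check on a hypothetical 6×8 BGRA array shows that slicing only the first two axes keeps every channel. Keep in mind that NumPy slicing returns a view of the original data, so call `.copy()` if you plan to modify `cropped` without touching `image`.

```python
import numpy as np

# Hypothetical 6x8 image with 4 channels (B, G, R, A), all opaque white
image = np.full((6, 8, 4), 255, dtype=np.uint8)
x0, y0, x1, y1 = 1, 2, 4, 7  # made-up box: rows 1..3, columns 2..6

cropped = image[x0:x1, y0:y1]  # the channel axis is untouched
print(cropped.shape)  # (3, 5, 4)
```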

And then we save the image:

```python
target = "output.png"
# PNG compression level goes from 0 (none) to 9 (smallest file, slowest to write)
cv2.imwrite(target, cropped, [cv2.IMWRITE_PNG_COMPRESSION, 9])
```

Results

The resulting image is the same graphic, cropped tightly around its content with no margins.

After this, we can polish our code to add more functionality, such as handling black borders instead of white, or transparent pixels instead of black/white. Moreover, we can wrap the functionality in a console utility with proper parameter parsing to make it more convenient to use.
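As a rough sketch of how the mask step could be swapped for those variants (these helpers are hypothetical and are not taken from the author's imgauto code): for black borders, a pixel belongs to the graphic when its gray value is above `tolerance`; for transparent borders, when its alpha channel (index 3 of a BGRA image loaded with `cv2.IMREAD_UNCHANGED`) is above `tolerance`. Either mask can then go through the same `argwhere`, `min`, and `max` steps.

```python
import numpy as np


def white_border_mask(gray, tolerance=10):
    """True where the pixel is darker than near-white (the article's mask)."""
    return gray < (255 - tolerance)


def black_border_mask(gray, tolerance=10):
    """True where the pixel is lighter than near-black."""
    return gray > tolerance


def transparent_border_mask(bgra, tolerance=10):
    """True where the pixel is more opaque than near-transparent."""
    if bgra.ndim != 3 or bgra.shape[2] != 4:
        raise ValueError("expected a 4-channel BGRA image")
    return bgra[:, :, 3] > tolerance


gray = np.array([[0, 10, 11, 250]], dtype=np.uint8)
print(black_border_mask(gray).tolist())  # [[False, False, True, True]]
```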

You may find my source code with the added extras at: github.com/lluises/imgauto

Conclusion

In conclusion, today we learned how to detect the boundaries of a graphic inside an image and use them to crop its margins. To do so, we generate a binary mask from the original image, then use NumPy's `argwhere` to find the coordinates of all non-white pixels. Finally, we crop the original image between the first and last of those coordinates, which form the tightest bounding box around the graphic.

Full example code

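```python
import cv2
import numpy as np

input_path = "kitsune.png"

# Load the image, keeping the alpha channel if there is one
image = cv2.imread(input_path, cv2.IMREAD_UNCHANGED)
if image is None:
    raise FileNotFoundError(f"Could not read {input_path}")

# Grayscale copy used only to compute the crop box (1-channel files already are gray)
imgarr = image if image.ndim == 2 else cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

# Generate the binary mask: True where the pixel is not (near-)white
tolerance = 10  # valid range: 1 to 254
mask = imgarr < (255 - tolerance)

# Coordinates (row, column) of all non-white pixels
coords = np.argwhere(mask)
if coords.size == 0:
    raise ValueError("The image is completely white; there is nothing to crop.")

# Bounding box of the non-white pixels: x indexes rows, y indexes columns
x0, y0 = coords.min(axis=0)
x1, y1 = coords.max(axis=0) + 1  # +1 because slice ends are exclusive

# Crop the original (color) image
cropped = image[x0:x1, y0:y1]

# Save
target = "output.png"
cv2.imwrite(target, cropped, [cv2.IMWRITE_PNG_COMPRESSION, 9])
```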
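If you want to reuse the logic on arrays you already have in memory, it can be wrapped in a function. The sketch below is a hypothetical variation, not the author's code: instead of converting to grayscale, it treats a pixel as white only if each of its B, G, and R channels is at least `255 - tolerance`. That keeps very pale colors (for example a light yellow whose gray value would be above 245) as part of the graphic, and it needs only NumPy.

```python
import numpy as np


def crop_white_margins(image, tolerance=10):
    """Crop near-white margins from a 2D (gray) or 3D (BGR/BGRA) uint8 array.

    Hypothetical variation: a pixel counts as white only if every color channel
    is >= 255 - tolerance. The alpha channel (if any) is ignored when deciding
    and is kept in the output.
    """
    if image.ndim == 2:
        darkest = image
    else:
        darkest = image[:, :, :3].min(axis=2)  # darkest color channel per pixel
    mask = darkest < (255 - tolerance)
    coords = np.argwhere(mask)
    if coords.size == 0:
        return image  # nothing but white: return the image unchanged
    x0, y0 = coords.min(axis=0)
    x1, y1 = coords.max(axis=0) + 1  # +1 because slice ends are exclusive
    return image[x0:x1, y0:y1]
```

For example, `crop_white_margins(cv2.imread("kitsune.png", cv2.IMREAD_UNCHANGED))` would return the cropped array, ready to pass to `cv2.imwrite`.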


The end


▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁

░▇▇▇▇▇▇▇▇▇░░░░░░░░░░░░░▇▇▇░░░▇▇▇▇▇▇▇▇▇▇▇░

░░░░░░░░▇▇░░░░░░░░▇▇▇▇▇░░░░░░░░░░░░░░▇▇░░

░░░░░░░░▇▇░░░░░▇▇▇░░▇▇░░░░░░░░░░░░░▇▇░░░░

░░░░░░░░▇▇░░░░░░░░░░▇▇░░░░░░░░░░░▇▇▇░░░░░

░░░░░░░░▇▇░░░░░░░░░░▇▇░░░░░░░░░▇▇░░░▇▇░░░

░▇▇▇▇▇▇▇▇▇▇▇░░░░░░░░▇▇░░░░░░░▇▇░░░░░░░▇▇░

▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔
